Filters-Instagram

This notebook includes both coding and written questions. Please hand in this notebook file with all the outputs and your answers to the written questions.

1 | # Setup |

2 | import numpy as np |

3 | import matplotlib.pyplot as plt |

4 | from time import time |

5 | #from skimage import io |

6 | |

7 | from __future__ import print_function |

8 | |

9 | %matplotlib inline |

10 | plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots |

11 | plt.rcParams['image.interpolation'] = 'nearest' |

12 | plt.rcParams['image.cmap'] = 'gray' |

13 | |

14 | # for auto-reloading extenrnal modules |

15 | %load_ext autoreload |

16 | %autoreload 2 |

Convolutions

@(Commutative Property)

Recall that the convolution of an image and a kernel is defined as follows:

将式中积分变量 i 和 j 置换为 m−x 和 n−y,即可证明

@(Linear and Shift Invariance)

Let be a function . Consider a system , where with some kernel . Show that defined by any kernel is a Linear Shift Invariant (LSI) system. In other words, for any , show that satisfies both of the following:

向量化

向量化可以提高计算效率,实现更快的卷积计算。

向量化的思路就是:

- 将卷积核进行向量化

- 将迭代过程中的计算块(计算中心及其领域)进行向量化,由于运算的对象都是核,此处可以将向量化的计算块拼成矩阵

- 两者进行内积计算,并reshape成原有图像大小

Cross-correlation

Cross-correlation of two 2D signals and is defined as follows:

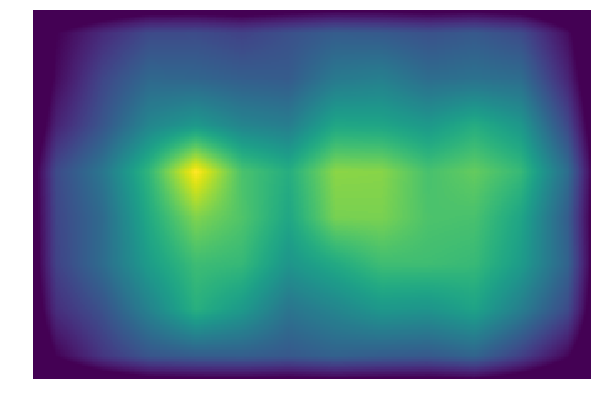

Template Matching with Cross-correlation

使用互相关性实现模板匹配

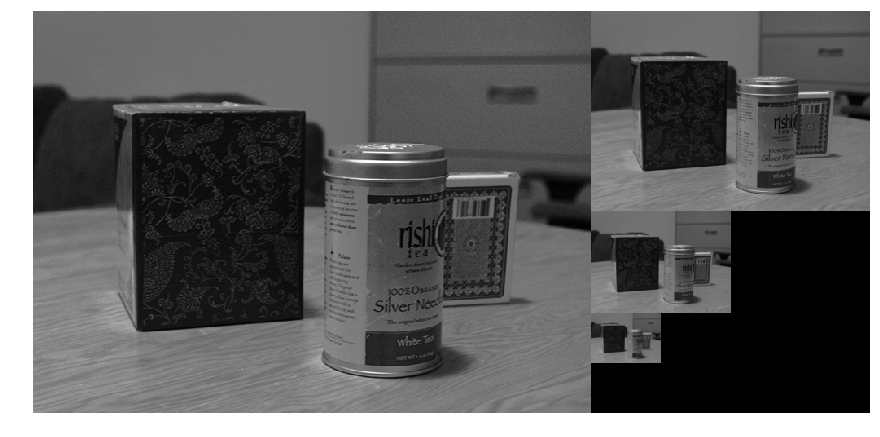

Suppose that you are a clerk at a grocery store. One of your responsibilites is to check the shelves periodically and stock them up whenever there are sold-out items. You got tired of this laborious task and decided to build a computer vision system that keeps track of the items on the shelf.

Luckily, you have learned in CS131 that cross-correlation can be used for template matching: a template is multiplied with regions of a larger image to measure how similar each region is to the template.

The template of a product (template.jpg) and the image of shelf (shelf.jpg) is provided. We will use cross-correlation to find the product in the shelf.

Implement cross_correlation function in filters.py and run the code below.Hint: you may use the conv_fast function you implemented in the previous question.

1 | from filters import cross_correlation |

2 | |

3 | # Load template and image in grayscale |

4 | img = io.imread('shelf.jpg') |

5 | img_grey = io.imread('shelf.jpg', as_grey=True) |

6 | temp = io.imread('template.jpg') |

7 | temp_grey = io.imread('template.jpg', as_grey=True) |

8 | |

9 | # Perform cross-correlation between the image and the template |

10 | out = cross_correlation(img_grey, temp_grey) |

11 | |

12 | # Find the location with maximum similarity |

13 | y,x = (np.unravel_index(out.argmax(), out.shape)) |

14 | |

15 | # Display product template |

16 | plt.figure(figsize=(25,20)) |

17 | plt.subplot(3, 1, 1) |

18 | plt.imshow(temp) |

19 | plt.title('Template') |

20 | plt.axis('off') |

21 | |

22 | # Display cross-correlation output |

23 | plt.subplot(3, 1, 2) |

24 | plt.imshow(out) |

25 | plt.title('Cross-correlation (white means more correlated)') |

26 | plt.axis('off') |

27 | |

28 | # Display image |

29 | plt.subplot(3, 1, 3) |

30 | plt.imshow(img) |

31 | plt.title('Result (blue marker on the detected location)') |

32 | plt.axis('off') |

33 | |

34 | # Draw marker at detected location |

35 | plt.plot(x, y, 'bx', ms=40, mew=10) |

36 | plt.show() |

Interpretation

How does the output of cross-correlation filter look like? Was it able to detect the product correctly? Explain what might be the problem with using raw template as a filter.

Zero-mean cross-correlation

A solution to this problem is to subtract off the mean value of the template so that it has zero mean.

Implement zero_mean_cross_correlation function in filters.py and run the code below.

Normalized Cross-correlation

One day the light near the shelf goes out and the product tracker starts to malfunction. The zero_mean_cross_correlation is not robust to change in lighting condition. The code below demonstrates this.

A solution is to normalize the pixels of the image and template at every step before comparing them. This is called normalized cross-correlation.

The mathematical definition for normalized cross-correlation of and template is:

where

is the patch image at position (m,n)

is the mean of the patch image

is the standard deviation of the patch image

is the mean of the template

is the standard deviation of the template

不可以直接对整幅待匹配的图做归一化操作。

正确的图像区域,如果是做整幅图的归一化,明显受到了其他区域诸如亮度颜色等的影响,干扰了匹配。

应该对每个待匹配块做归一化操作,然后比较匹配程度。

Separable Filters

Consider a image I and a filter F. A filter F is separable if it can be written as a product of two 1D filters: .

For example,

can be written as a matrix product of

Therefore is a separable filter.

For any separable filter

Complexity comparison

- 乘法操作的次数为

- 乘法操作的次数为

Edges-Smart Car Lane Detection

Canny Edge Detector

In this part, you are going to implment Canny edge detector. The Canny edge detection algorithm can be broken down in to five steps:

- Smoothing:平滑处理(去躁)

- Finding gradients:寻找梯度

- Non-maximum suppression:非极大值抑制

- Double thresholding:双阈值算法

- Edge tracking by hysteresis:滞后边缘追踪

Smoothing

We first smooth the input image by convolving it with a Gaussian kernel. The equation for a Gaussian kernel of size (2k+1)×(2k+1) is given by:

Implement gaussian_kernel in edge.py and run the code below.

1 | from edge import conv, gaussian_kernel |

2 | |

3 | # Define 3x3 Gaussian kernel with std = 1 |

4 | kernel = gaussian_kernel(3, 1) |

5 | kernel_test = np.array( |

6 | [[ 0.05854983, 0.09653235, 0.05854983], |

7 | [ 0.09653235, 0.15915494, 0.09653235], |

8 | [ 0.05854983, 0.09653235, 0.05854983]] |

9 | ) |

10 | # print(kernel) |

11 | |

12 | # Test Gaussian kernel |

13 | if not np.allclose(kernel, kernel_test): |

14 | print('Incorrect values! Please check your implementation.') |

需要先实现edge.py中的conv卷积函数,可以使用作业hw1中的conv_nested,conv_fast,conv_faster等函数

1 | # Test with different kernel_size and sigma |

2 | kernel_size = 5 |

3 | sigma = 1.4 |

4 | |

5 | # Load image |

6 | img = io.imread('iguana.png', as_grey=True) |

7 | |

8 | # Define 5x5 Gaussian kernel with std = sigma |

9 | kernel = gaussian_kernel(kernel_size, sigma) |

10 | |

11 | # Convolve image with kernel to achieve smoothed effect |

12 | smoothed = conv(img, kernel) |

13 | |

14 | plt.subplot(1,2,1) |

15 | plt.imshow(img) |

16 | plt.title('Original image') |

17 | plt.axis('off') |

18 | |

19 | plt.subplot(1,2,2) |

20 | plt.imshow(smoothed) |

21 | plt.title('Smoothed image') |

22 | plt.axis('off') |

23 | |

24 | plt.show() |

What is the effect of the kernel_size and sigma?

高斯滤波器用像素邻域的加权均值来代替该点的像素值,而每一邻域像素点权值是随该点与中心点的距离单调增减的,因为边缘是一种图像局部特征,如果平滑运算对离算子中心很远的像素点仍然有很大作用,则平滑运算会使图像失真。

高斯滤波器宽度(决定着平滑程度)是由参数σ表征的,而且σ和平滑程度的关系是非常简单的.σ越大,高斯滤波器的频带就越宽,平滑程度就越好.通过调节平滑程度参数σ,可在图像特征过分模糊(过平滑)与平滑图像中由于噪声和细纹理所引起的过多的不希望突变量(欠平滑)之间取得折衷。

Finding gradients

The gradient of a 2D scalar function in Cartesian coordinate is defined by:

Note that the partial derivatives can be computed by convolving the image with some appropriate kernels and :

Find the kernels and and implement partial_x and partial_y using conv defined in edge.py.

-Hint: Remeber that convolution flips the kernel.

1 | from edge import partial_x, partial_y |

2 | |

3 | # Test input |

4 | I = np.array( |

5 | [[0, 0, 0], |

6 | [0, 1, 0], |

7 | [0, 0, 0]] |

8 | ) |

9 | |

10 | # Expected outputs |

11 | I_x_test = np.array( |

12 | [[ 0, 0, 0], |

13 | [ 0.5, 0, -0.5], |

14 | [ 0, 0, 0]] |

15 | ) |

16 | |

17 | I_y_test = np.array( |

18 | [[ 0, 0.5, 0], |

19 | [ 0, 0, 0], |

20 | [ 0, -0.5, 0]] |

21 | ) |

22 | |

23 | # Compute partial derivatives |

24 | I_x = partial_x(I) |

25 | I_y = partial_y(I) |

26 | |

27 | # Test correctness of partial_x and partial_y |

28 | if not np.all(I_x == I_x_test): |

29 | print('partial_x incorrect') |

30 | |

31 | if not np.all(I_y == I_y_test): |

32 | print('partial_y incorrect') |

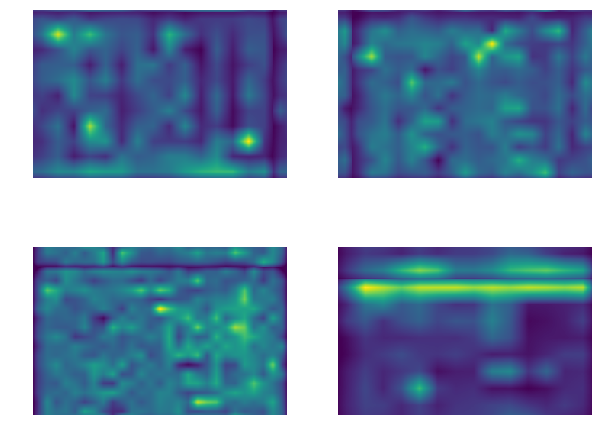

1 | # Compute partial derivatives of smoothed image |

2 | Gx = partial_x(smoothed) |

3 | Gy = partial_y(smoothed) |

4 | |

5 | plt.subplot(1,2,1) |

6 | plt.imshow(Gx) |

7 | plt.title('Derivative in x direction') |

8 | plt.axis('off') |

9 | |

10 | plt.subplot(1,2,2) |

11 | plt.imshow(Gy) |

12 | plt.title('Derivative in y direction') |

13 | plt.axis('off') |

14 | |

15 | plt.show() |

What is the reason for performing smoothing prior to computing the gradients?

平滑是为了去噪,噪声会使得计算梯度时得到幅度较大的异常值。

Now, we can compute the magnitude and direction of gradient with the two partial derivatives:

Implement gradient in

edge.pywhich takes in an image and outputs and .

1 | from edge import gradient |

2 | |

3 | G, theta = gradient(smoothed) |

4 | |

5 | if not np.all(G >= 0): |

6 | print('Magnitude of gradients should be non-negative.') |

7 | |

8 | if not np.all((theta >= 0) * (theta < 360)): |

9 | print('Direction of gradients should be in range 0 <= theta < 360') |

10 | |

11 | plt.imshow(G) |

12 | plt.title('Gradient magnitude') |

13 | plt.axis('off') |

14 | plt.show() |

Non-maximum suppression

You should be able to note that the edges extracted from the gradient of the smoothed image is quite thick and blurry. The purpose of this step is to convert the “blurred” edges into “sharp” edges. Basically, this is done by preserving all local maxima in the gradient image and discarding everything else. The algorithm is for each pixel in the gradient image:

- Round the gradient direction to the nearest 45 degrees, corresponding to the use of an 8-connected neighbourhood.

当然这种做法其实是简化版本的,虽然Canny的论文上是这么写的,但是后来也有提出,梯度肯定不止这四个,针对别的梯度方向的比较,使用插值等方法来进行非极大值抑制. Canny算子中的非极大值抑制(Non-Maximum Suppression)分析

- Compare the edge strength of the current pixel with the edge strength of the pixel in the positive and negative gradient direction. For example, if the gradient direction is south (theta=90), compare with the pixels to the north and south.

- If the edge strength of the current pixel is the largest; preserve the value of the edge strength. If not, suppress (i.e. remove) the value.

非极大值抑制属于一种边缘细化的方法,梯度大的位置有可能为边缘,在这些位置沿着梯度方向,找到像素点的局部最大值,并将其非最大值抑制。

形象的说就是一条粗粗的区域都根据梯度强度判断成了边缘,但目标只需要一条细细的边,这个时候就找区域内的最大的那条作为边(注意是沿着梯度方向),其他的或抛弃或作为备选区域

1 | from edge import non_maximum_suppression |

2 | |

3 | nms = non_maximum_suppression(G, theta) |

4 | plt.imshow(nms) |

5 | plt.title('Non-maximum suppressed') |

6 | plt.axis('off') |

7 | plt.show() |

Double Thresholding

The edge-pixels remaining after the non-maximum suppression step are (still) marked with their strength pixel-by-pixel. Many of these will probably be true edges in the image, but some may be caused by noise or color variations, for instance, due to rough surfaces. The simplest way to discern between these would be to use a threshold, so that only edges stronger that a certain value would be preserved. The Canny edge detection algorithm uses double thresholding. Edge pixels stronger than the high threshold are marked as strong; edge pixels weaker than the low threshold are suppressed and edge pixels between the two thresholds are marked as weak.

Implement double_thresholding in edge.py

双阀值方法,设置一个maxval,以及minval,梯度大于maxval则为强边缘,梯度值介于maxval与minval则为弱边缘点,小于minval为抑制点。

双阈值的结果在第五步可以用来作为边缘追踪的依据。

1 | from edge import double_thresholding |

2 | |

3 | low_threshold = 0.02 |

4 | high_threshold = 0.03 |

5 | |

6 | strong_edges, weak_edges = double_thresholding(nms, high_threshold, low_threshold) |

7 | assert(np.sum(strong_edges & weak_edges) == 0) |

8 | |

9 | edges=strong_edges * 1.0 + weak_edges * 0.5 |

10 | |

11 | plt.subplot(1,2,1) |

12 | plt.imshow(strong_edges) |

13 | plt.title('Strong Edges') |

14 | plt.axis('off') |

15 | |

16 | plt.subplot(1,2,2) |

17 | plt.imshow(edges) |

18 | plt.title('Strong+Weak Edges') |

19 | plt.axis('off') |

20 | |

21 | plt.show() |

Edge tracking

Strong edges are interpreted as “certain edges”, and can immediately be included in the final edge image. Weak edges are included if and only if they are connected to strong edges. The logic is of course that noise and other small variations are unlikely to result in a strong edge (with proper adjustment of the threshold levels). Thus strong edges will (almost) only be due to true edges in the original image. The weak edges can either be due to true edges or noise/color variations. The latter type will probably be distributed in dependently of edges on the entire image, and thus only a small amount will be located adjacent to strong edges. Weak edges due to true edges are much more likely to be connected directly to strong edges.

Implement link_edges in edge.py

由于边缘是连续的,因此可以认为弱边缘如果为真实边缘则和强边缘是联通的,可由此判断其是否为真实边缘。

Canny edge detector

Implement canny in edge.py using the functions you have implemented so far. Test edge detector with different parameters.

1 | from edge import canny |

2 | |

3 | # Load image |

4 | img = io.imread('iguana.png', as_grey=True) |

5 | |

6 | # Run Canny edge detector |

7 | edges = canny(img, kernel_size=5, sigma=1.4, high=0.04, low=0.02) |

8 | print (edges.shape) |

9 | plt.imshow(edges) |

10 | plt.axis('off') |

11 | plt.show() |

Suppose that the Canny edge detector successfully detects an edge in an image. The edge is then rotated by , where the relationship between a point on the original edge and a point on the rotated edge is defined as

Will the rotated edge be detected using the same Canny edge detector? Provide either a mathematical proof or a counter example.

-Hint: The detection of an edge by the Canny edge detector depends only on the magnitude of its derivative. The derivative at point is determined by its components along the and directions. Think about how these magnitudes have changed because of the rotation.

幅度没变,所以边缘检测不变。

After running the Canny edge detector on an image, you notice that long edges are broken into short segments separated by gaps. In addition, some spurious edges appear. For each of the two thresholds (low and high) used in hysteresis thresholding, explain how you would adjust the threshold (up or down) to address both problems. Assume that a setting exists for the two thresholds that produces the desired result. Briefly explain your answer.

调参方法:

如果长直线被断成小段,说明weak_edges的阈值太大,weak_edges的数量较少,此时应当调低weak_edges的阈值。

如果spurious (伪直线)出现,说明strong_edges的阈值太小,strong_edges的数量较多,此时应当调高strong_edges的阈值。

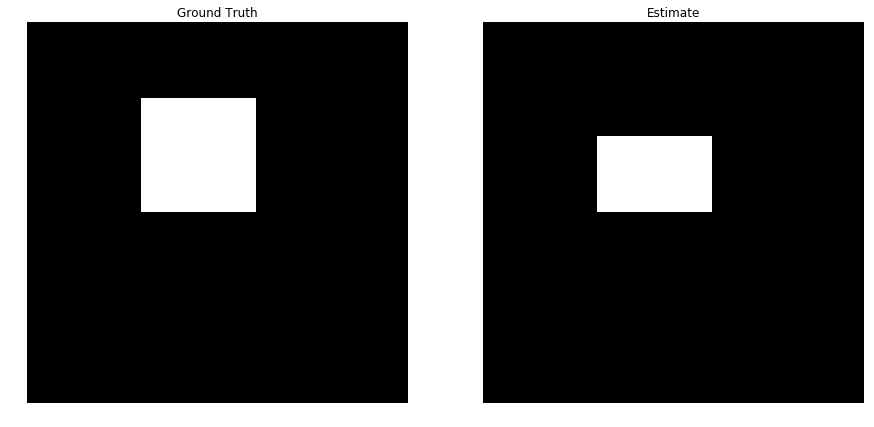

Extra Credit: Optimizing Edge Detector

One way of evaluating an edge detector is to compare detected edges with manually specified ground truth edges. Here, we use precision, recall and F1 score as evaluation metrics. We provide you 40 images of objects with ground truth edge annotations. Run the code below to compute precision, recall and F1 score over the entire set of images. Then, tweak the parameters of the Canny edge detector to get as high F1 score as possible. You should be able to achieve F1 score higher than 0.31 by carefully setting the parameters.

1 | from os import listdir |

2 | from itertools import product |

3 | |

4 | # Define parameters to test |

5 | sigmas = [1.6, 1.7, 1.8, 1.9, 2.0] |

6 | highs = [0.04, 0.05, 0.06] |

7 | lows = [0.015, 0.02, 0.025] |

8 | |

9 | for sigma, high, low in product(sigmas, highs, lows): |

10 | |

11 | print("sigma={}, high={}, low={}".format(sigma, high, low)) |

12 | n_detected = 0.0 |

13 | n_gt = 0.0 |

14 | n_correct = 0.0 |

15 | |

16 | for img_file in listdir('images/objects'): |

17 | img = io.imread('images/objects/'+img_file, as_grey=True) |

18 | gt = io.imread('images/gt/'+img_file+'.gtf.pgm', as_grey=True) |

19 | |

20 | mask = (gt != 5) # 'don't' care region |

21 | gt = (gt == 0) # binary image of GT edges |

22 | |

23 | edges = canny(img, kernel_size=5, sigma=sigma, high=high, low=low) |

24 | edges = edges * mask |

25 | |

26 | n_detected += np.sum(edges) |

27 | n_gt += np.sum(gt) |

28 | n_correct += np.sum(edges * gt) |

29 | |

30 | p_total = n_correct / n_detected |

31 | r_total = n_correct / n_gt |

32 | f1 = 2 * (p_total * r_total) / (p_total + r_total) |

33 | print('Total precision={:.4f}, Total recall={:.4f}'.format(p_total, r_total)) |

34 | print('F1 score={:.4f}'.format(f1)) |

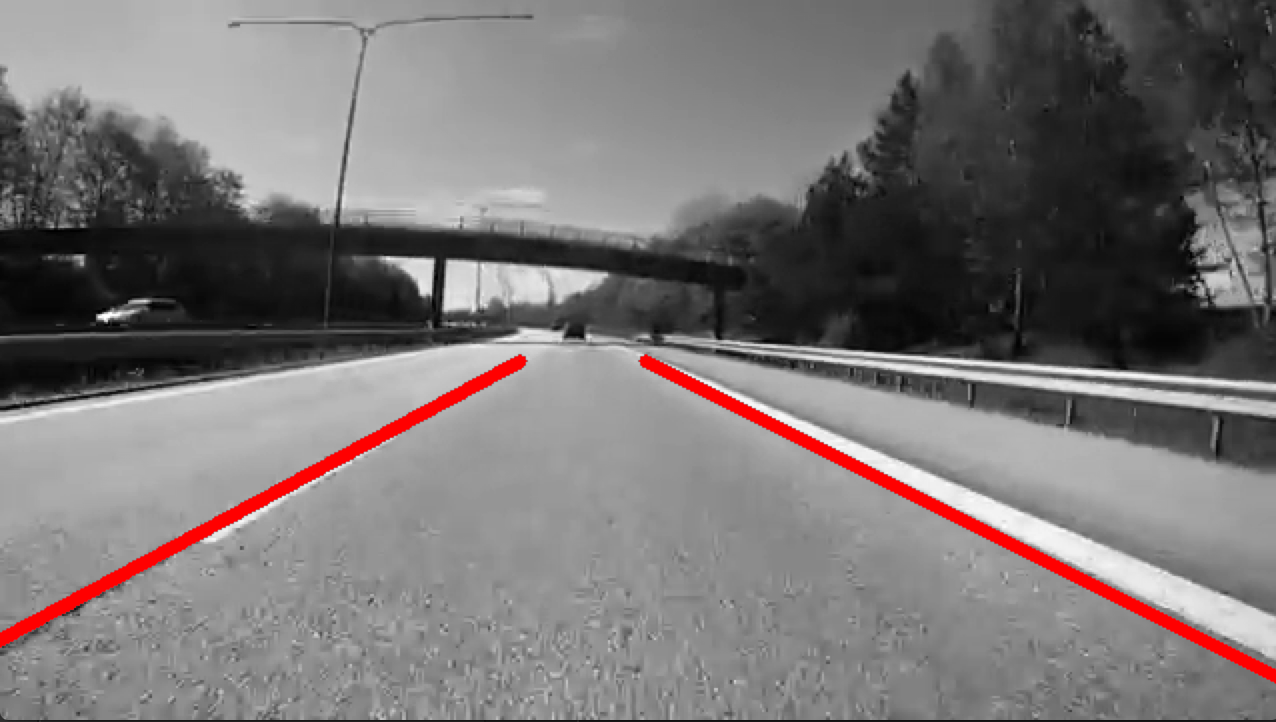

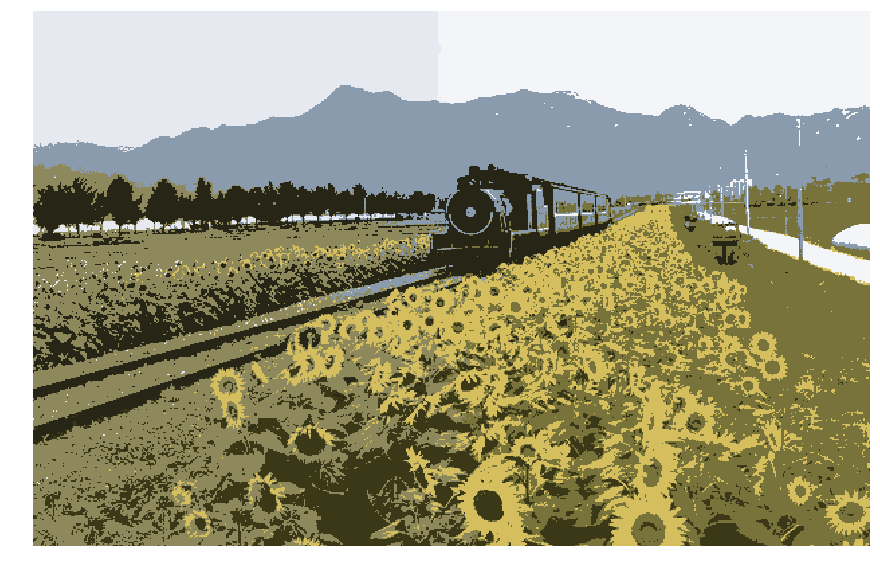

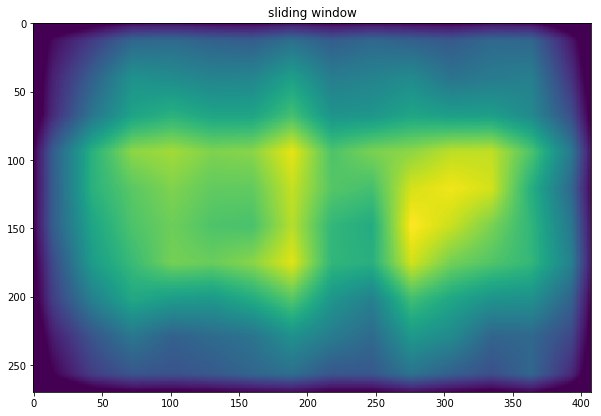

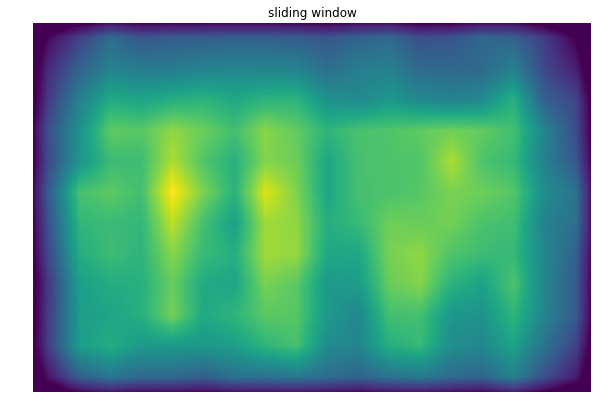

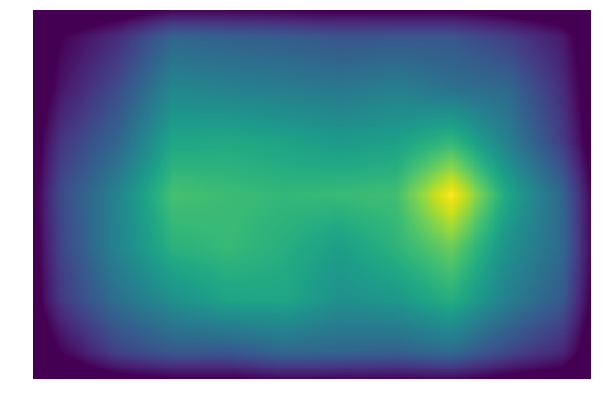

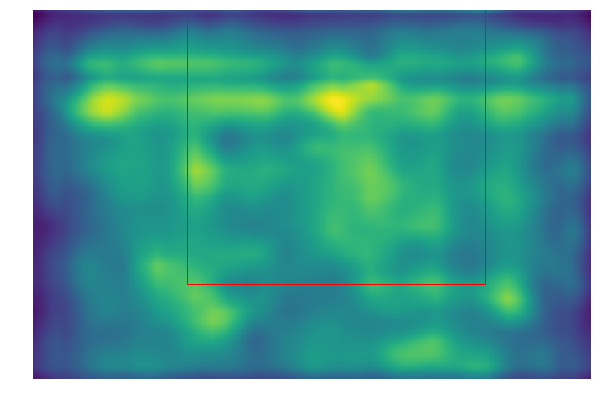

Lane Detection

In this section we will implement a simple lane detection application using Canny edge detector and Hough transform.

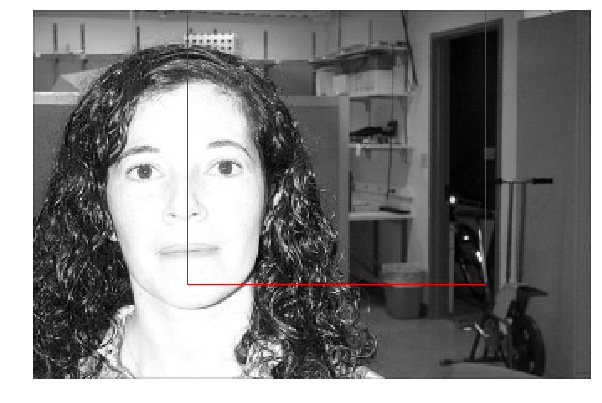

Here are some example images of how your final lane detector will look like.

The algorithm can broken down into the following steps:

- Detect edges using the Canny edge detector.

- Extract the edges in the region of interest (a triangle covering the bottom corners and the center of the image).

- Run Hough transform to detect lanes.

Edge detection

Lanes on the roads are usually thin and long lines with bright colors. Our edge detection algorithm by itself should be able to find the lanes pretty well. Run the code cell below to load the example image and detect edges from the image.

1 | from edge import canny |

2 | |

3 | # Load image |

4 | img = io.imread('road.jpg', as_grey=True) |

5 | |

6 | # Run Canny edge detector |

7 | edges = canny(img, kernel_size=5, sigma=1.4, high=0.03, low=0.008) |

8 | |

9 | |

10 | plt.subplot(211) |

11 | plt.imshow(img) |

12 | plt.axis('off') |

13 | plt.title('Input Image') |

14 | |

15 | plt.subplot(212) |

16 | plt.imshow(edges) |

17 | plt.axis('off') |

18 | plt.title('Edges') |

19 | plt.show() |

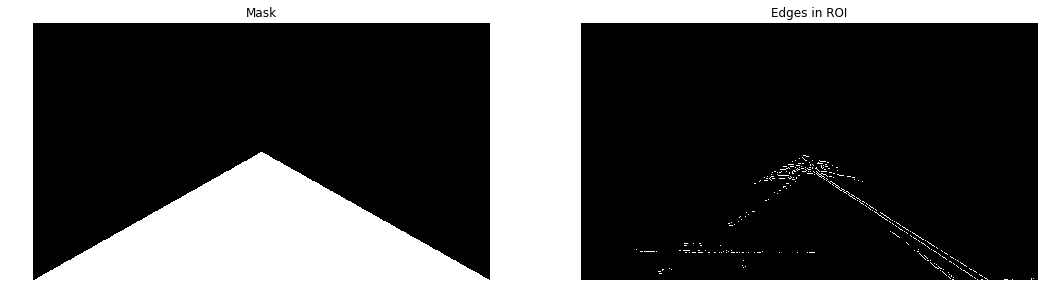

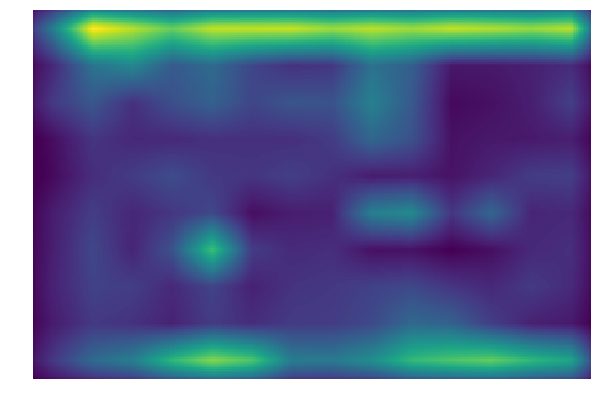

Extracting region of interest (ROI)

We can see that the Canny edge detector could find the edges of the lanes. However, we can also see that there are edges of other objects that we are not interested in. Given the position and orientation of the camera, we know that the lanes will be located in the lower half of the image. The code below defines a binary mask for the ROI and extract the edges within the region.

1 | H, W = img.shape |

2 | |

3 | # Generate mask for ROI (Region of Interest) |

4 | mask = np.zeros((H, W)) |

5 | for i in range(H): |

6 | for j in range(W): |

7 | if i > (H / W) * j and i > -(H / W) * j + H: |

8 | mask[i, j] = 1 |

9 | |

10 | # Extract edges in ROI |

11 | roi = edges * mask |

12 | |

13 | plt.subplot(1,2,1) |

14 | plt.imshow(mask) |

15 | plt.title('Mask') |

16 | plt.axis('off') |

17 | |

18 | plt.subplot(1,2,2) |

19 | plt.imshow(roi) |

20 | plt.title('Edges in ROI') |

21 | plt.axis('off') |

22 | plt.show() |

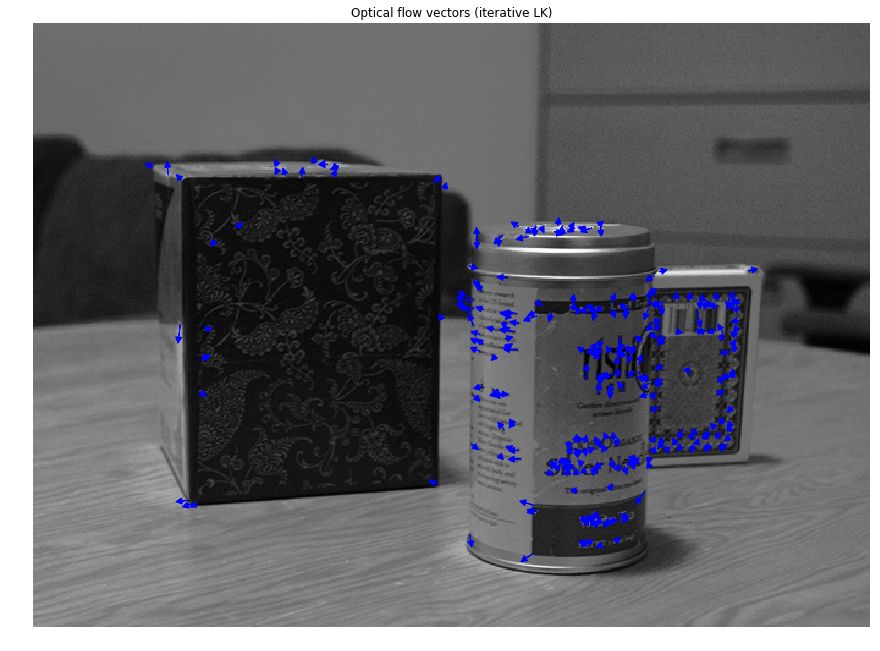

Fitting lines using Hough transform

The output from the edge detector is still a collection of connected points. However, it would be more natural to represent a lane as a line parameterized as , with a slope and -intercept . We will use Hough transform to find parameterized lines that represent the detected edges.

In general, a straight line can be represented as a point in the parameter space. However, this cannot represent vertical lines as the slope parameter will be unbounded. Alternatively, we parameterize a line using and as follows:

Using this parameterization, we can map everypoint in -space to a sine-like line in -space (or Hough space). We then accumulate the parameterized points in the Hough space and choose points (in Hough space) with highest accumulated values. A point in Hough space then can be transformed back into a line in -space.

See lectures on Hough transform.

Implement hough_transform in edge.py.

1 | from edge import hough_transform |

2 | |

3 | # Perform Hough transform on the ROI |

4 | acc, rhos, thetas = hough_transform(roi) |

5 | |

6 | # Coordinates for right lane |

7 | xs_right = [] |

8 | ys_right = [] |

9 | |

10 | # Coordinates for left lane |

11 | xs_left = [] |

12 | ys_left = [] |

13 | |

14 | for i in range(20): |

15 | idx = np.argmax(acc) |

16 | r_idx = idx // acc.shape[1] |

17 | t_idx = idx % acc.shape[1] |

18 | acc[r_idx, t_idx] = 0 # Zero out the max value in accumulator |

19 | |

20 | rho = rhos[r_idx] |

21 | theta = thetas[t_idx] |

22 | |

23 | # Transform a point in Hough space to a line in xy-space. |

24 | a = - (np.cos(theta)/np.sin(theta)) # slope of the line |

25 | b = (rho/np.sin(theta)) # y-intersect of the line |

26 | |

27 | # Break if both right and left lanes are detected |

28 | if xs_right and xs_left: |

29 | break |

30 | |

31 | if a < 0: # Left lane |

32 | if xs_left: |

33 | continue |

34 | xs = xs_left |

35 | ys = ys_left |

36 | else: # Right Lane |

37 | if xs_right: |

38 | continue |

39 | xs = xs_right |

40 | ys = ys_right |

41 | |

42 | for x in range(img.shape[1]): |

43 | y = a * x + b |

44 | if y > img.shape[0] * 0.6 and y < img.shape[0]: |

45 | xs.append(x) |

46 | ys.append(int(round(y))) |

47 | |

48 | plt.imshow(img) |

49 | plt.plot(xs_left, ys_left, linewidth=5.0) |

50 | plt.plot(xs_right, ys_right, linewidth=5.0) |

51 | plt.axis('off') |

52 | plt.show() |

之所以只检测出一条直线,是因为上面的边缘检测部分不是很完美,尤其是左边车道线的边缘检测出现了问题,才会导致左边车道线做霍夫变换也得不到理想的结果

Panorama-ImageStiching

This assignment covers Harris corner detector(角点检测器), RANSAC(随机抽样一致) and HOG descriptor(HOG描述子).

Panorama stitching is an early success of computer vision. Matthew Brown and David G. Lowe published a famous panoramic image stitching paper in 2007. Since then, automatic panorama stitching technology has been widely adopted in many applications such as Google Street View, panorama photos on smartphones,and stitching software such as Photosynth and AutoStitch.

In this assignment, we will detect and match keypoints from multiple images to build a single panoramic image. This will involve several tasks:

- Use Harris corner detector to find keypoints.

- Build a descriptor to describe each point in an image.

Compare two sets of descriptors coming from two different images and find matching keypoints.- Given a list of matching keypoints, use least-squares method to find the affine transformation matrix that maps points in one image to another.

- Use RANSAC to give a more robust estimate of affine transformation matrix.

Given the transformation matrix, use it to transform the second image and overlay it on the first image, forming a panorama.- Implement a different descriptor (HOG descriptor) and get another stitching result.

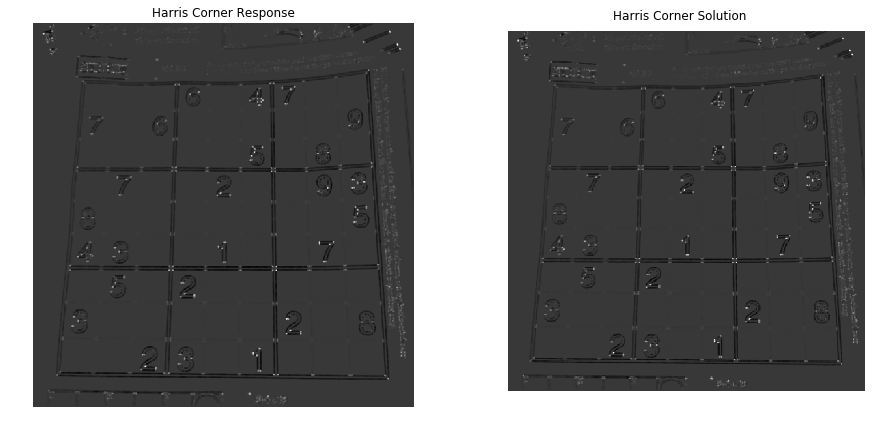

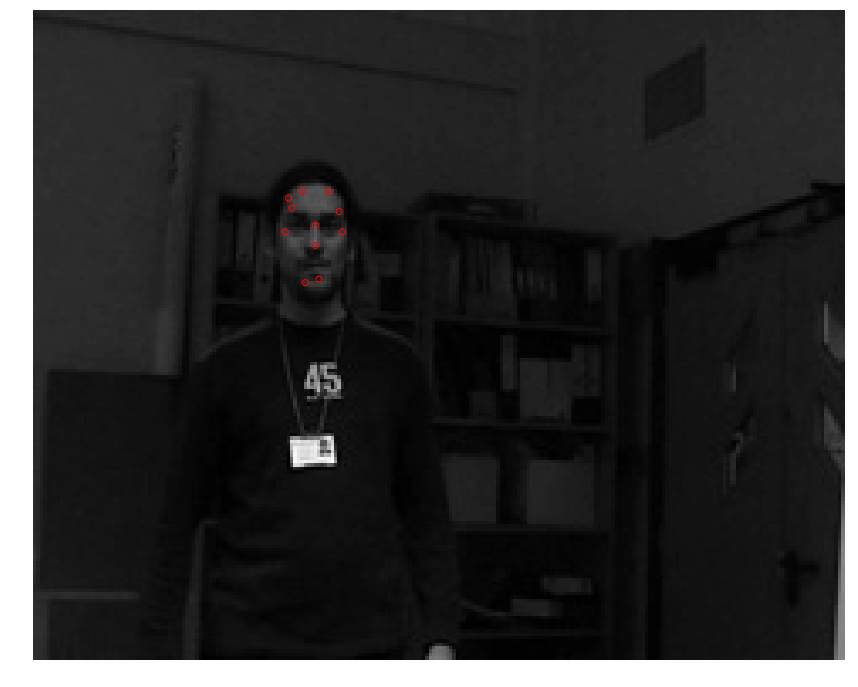

Harris Corner Detector

In this section, you are going to implement Harris corner detector for keypoint localization. Review the lecture slides on Harris corner detector to understand how it works. The Harris detection algorithm can be divide into the following steps:

- Compute and derivatives of an image

- Compute products of derivatives () at each pixel

- Compute matrix M at each pixel, where

- Compute corner response at each pixel

- Output corner response map

Step 1 is already done for you in the function harris_corners in panorama.py. Complete the function implementation and run the code below.

-Hint: You may use the function

scipy.ndimage.filters.convolve, which is already imported inpanoramy.py

关于Harris角点检测,这里有篇博客总结的很好。Harris角点

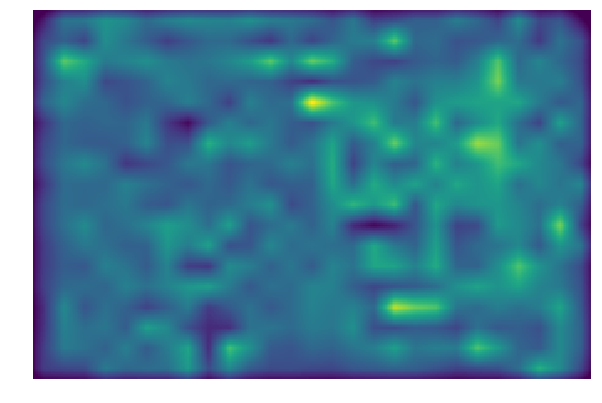

1 | from panorama import harris_corners |

2 | |

3 | img = imread('sudoku.png', as_grey=True) |

4 | |

5 | # Compute Harris corner response |

6 | response = harris_corners(img) |

7 | |

8 | # Display corner response |

9 | plt.subplot(1,2,1) |

10 | plt.imshow(response) |

11 | plt.axis('off') |

12 | plt.title('Harris Corner Response') |

13 | |

14 | plt.subplot(1,2,2) |

15 | plt.imshow(imread('solution_harris.png', as_grey=True)) |

16 | plt.axis('off') |

17 | plt.title('Harris Corner Solution') |

18 | |

19 | plt.show() |

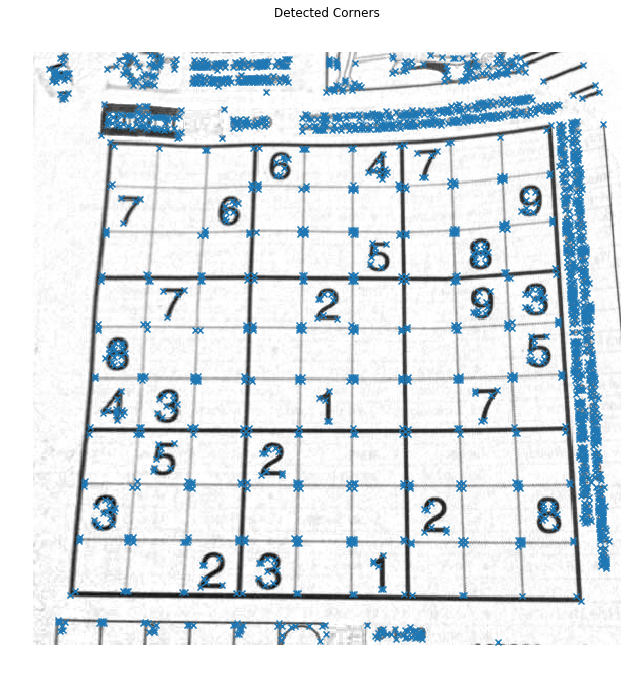

Once you implement the Harris detector correctly, you will be able to see small bright blobs around the corners of the sudoku grids and letters in the output corner response image. The function corner_peaks from skimage.feature performs non-maximum suppression to take local maxima of the response map and localize keypoints.

对响应输出进行非极大抑制。

1 | # Perform non-maximum suppression in response map |

2 | # and output corner coordiantes |

3 | corners = corner_peaks(response, threshold_rel=0.01) |

4 | |

5 | # Display detected corners |

6 | plt.imshow(img) |

7 | plt.scatter(corners[:,1], corners[:,0], marker='x') |

8 | plt.axis('off') |

9 | plt.title('Detected Corners') |

10 | plt.show() |

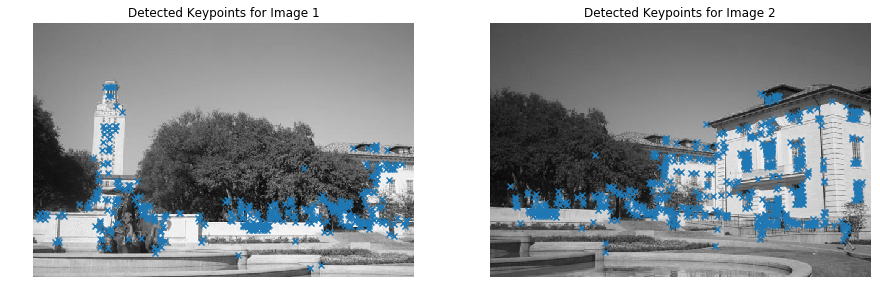

Describing and Matching Keypoints

We are now able to localize keypoints in two images by running the Harris corner detector independently on them. Next question is, how do we determine which pair of keypoints come from corresponding locations in those two images? In order to match the detected keypoints, we must come up with a way to describe the keypoints based on their local appearance. Generally, each region around detected keypoint locations is converted into a fixed-size vectors called descriptors.

Creating Descriptors

In this section, you are going to implement a simple_descriptor; each keypoint is described by normalized intensity in a small patch around it.

为了进行两幅图像中的关键点的点与点之间的匹配,需要对关键点进行描述。

此时,提出描述符对关键点的局部特征进行描述。

一般来说,描述符就是将检测到的关键点的附近区域转换成固定大小的向量。

1 | from panorama import harris_corners |

2 | |

3 | img1 = imread('uttower1.jpg', as_grey=True) |

4 | img2 = imread('uttower2.jpg', as_grey=True) |

5 | |

6 | # Detect keypoints in two images |

7 | keypoints1 = corner_peaks(harris_corners(img1, window_size=3), |

8 | threshold_rel=0.05, |

9 | exclude_border=8) |

10 | keypoints2 = corner_peaks(harris_corners(img2, window_size=3), |

11 | threshold_rel=0.05, |

12 | exclude_border=8) |

13 | |

14 | # Display detected keypoints |

15 | plt.subplot(1,2,1) |

16 | plt.imshow(img1) |

17 | plt.scatter(keypoints1[:,1], keypoints1[:,0], marker='x') |

18 | plt.axis('off') |

19 | plt.title('Detected Keypoints for Image 1') |

20 | |

21 | plt.subplot(1,2,2) |

22 | plt.imshow(img2) |

23 | plt.scatter(keypoints2[:,1], keypoints2[:,0], marker='x') |

24 | plt.axis('off') |

25 | plt.title('Detected Keypoints for Image 2') |

26 | plt.show() |

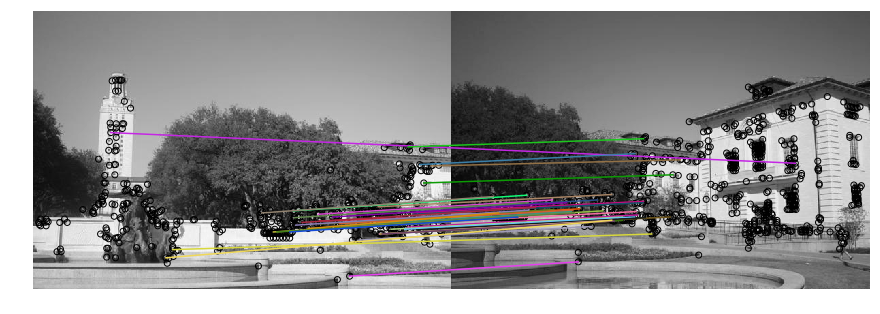

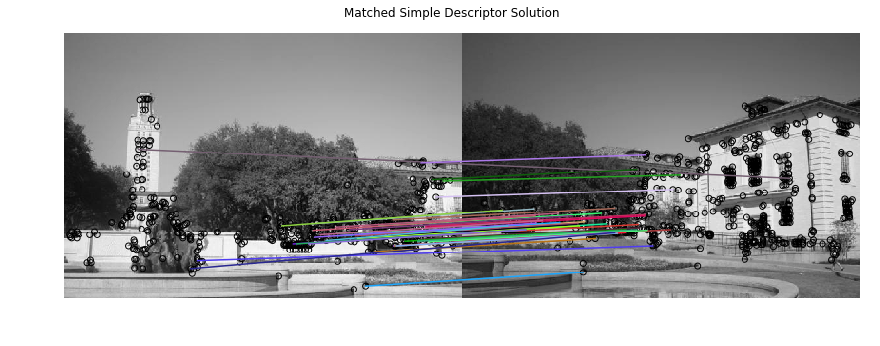

Matching Descriptors

Then, implement match_descriptors function to find good matches in two sets of descriptors. First, calculate Euclidean distance between all pairs of descriptors from image 1 and image 2. Then use this to determine if there is a good match: if the distance to the closest vector is significantly (by a factor which is given) smaller than the distance to the second-closest, we call it a match. The output of the function is an array where each row holds the indices of one pair of matching descriptors.

- 使用标准化的密度来作为描述子

- 使用欧几里得距离来对描述子进行匹配,当最短距离与第二短距离的比值小于阈值,则判定为匹配

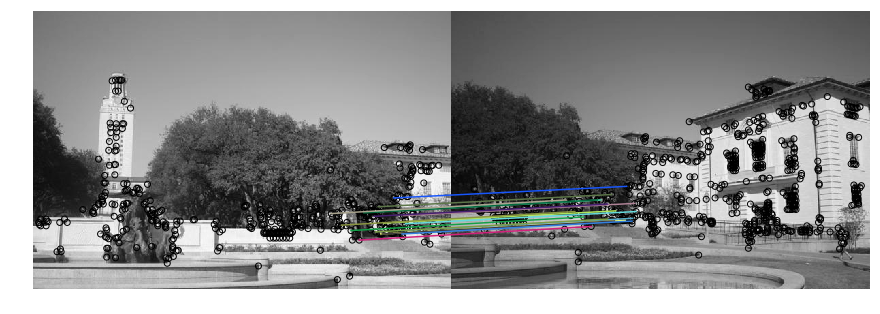

1 | from panorama import simple_descriptor, match_descriptors, describe_keypoints |

2 | from utils import plot_matches |

3 | |

4 | patch_size = 5 |

5 | |

6 | # Extract features from the corners |

7 | desc1 = describe_keypoints(img1, keypoints1, |

8 | desc_func=simple_descriptor, |

9 | patch_size=patch_size) |

10 | desc2 = describe_keypoints(img2, keypoints2, |

11 | desc_func=simple_descriptor, |

12 | patch_size=patch_size) |

13 | |

14 | # Match descriptors in image1 to those in image2 |

15 | matches = match_descriptors(desc1, desc2, 0.7) |

16 | |

17 | # Plot matches |

18 | fig, ax = plt.subplots(1, 1, figsize=(15, 12)) |

19 | ax.axis('off') |

20 | plot_matches(ax, img1, img2, keypoints1, keypoints2, matches) |

21 | plt.show() |

22 | plt.imshow(imread('solution_simple_descriptor.png')) |

23 | plt.axis('off') |

24 | plt.title('Matched Simple Descriptor Solution') |

25 | plt.show() |

Transformation Estimation

We now have a list of matched keypoints across the two images. We will use this to find a transformation matrix that maps points in the second image to the corresponding coordinates in the first image. In other words, if the point in image 1 matches with in image 2, we need to find an affine transformation matrix H such that

\tilde {p_2} H = \tilde{p_1}$$ where $\tilde {p_1}, \tilde{p_2}$ are homogenous coordinates of $p_1$ and $p_2$.

Note that it may be impossible to find the transformation that maps every point in image 2 exactly to the corresponding point in image 1. However, we can estimate the transformation matrix with least squares. Given N matched keypoint pairs, let and be matrices whose rows are homogenous coordinates of corresponding keypoints in image 1 and image 2 respectively. Then, we can estimate H by solving the least squares problem

Implement fit_affine_matrix in panorama.py

-Hint: read the documentation about np.linalg.lstsq

第三步就是要计算图2到图1的仿射变换矩阵。

由于匹配点对众多,这里采用最小二乘法估计变换矩阵。

1 | from panorama import fit_affine_matrix |

2 | |

3 | # Sanity check for fit_affine_matrix |

4 | |

5 | # Test inputs |

6 | a = np.array([[0.5, 0.1], [0.4, 0.2], [0.8, 0.2]]) |

7 | b = np.array([[0.3, -0.2], [-0.4, -0.9], [0.1, 0.1]]) |

8 | |

9 | H = fit_affine_matrix(b, a) |

10 | |

11 | # Target output |

12 | sol = np.array( |

13 | [[1.25, 2.5, 0.0], |

14 | [-5.75, -4.5, 0.0], |

15 | [0.25, -1.0, 1.0]] |

16 | ) |

17 | |

18 | error = np.sum((H - sol) ** 2) |

19 | |

20 | if error < 1e-20: |

21 | print('Implementation correct!') |

22 | else: |

23 | print('There is something wrong.') |

After checking that your fit_affine_matrix function is running correctly, run the following code to apply it to images.

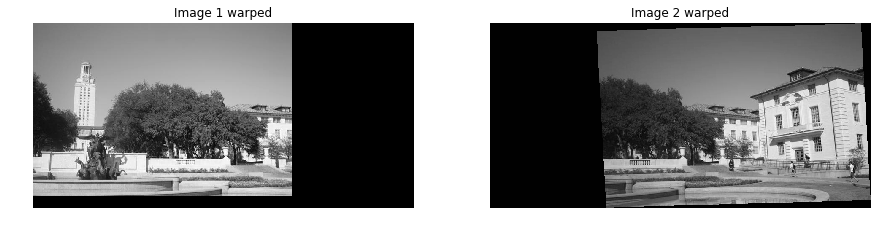

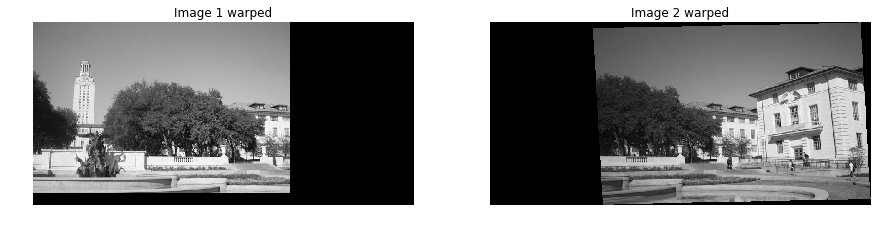

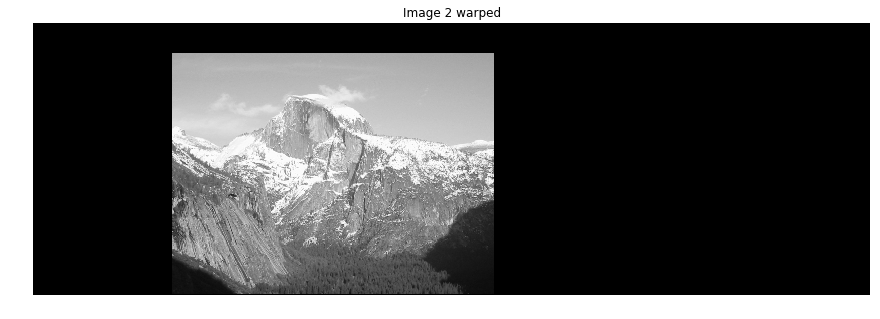

Images will be warped and image 2 will be mapped to image 1. Then, the two images are merged to get a panorama. Your panorama may not look good at this point, but we will later use other techniques to get a better result.

1 | from utils import get_output_space, warp_image |

2 | |

3 | # Extract matched keypoints |

4 | p1 = keypoints1[matches[:,0]] |

5 | p2 = keypoints2[matches[:,1]] |

6 | |

7 | # Find affine transformation matrix H that maps p2 to p1 |

8 | H = fit_affine_matrix(p1, p2) |

9 | |

10 | output_shape, offset = get_output_space(img1, [img2], [H]) |

11 | print("Output shape:", output_shape) |

12 | print("Offset:", offset) |

13 | |

14 | |

15 | # Warp images into output sapce |

16 | img1_warped = warp_image(img1, np.eye(3), output_shape, offset) |

17 | img1_mask = (img1_warped != -1) # Mask == 1 inside the image |

18 | img1_warped[~img1_mask] = 0 # Return background values to 0 |

19 | |

20 | img2_warped = warp_image(img2, H, output_shape, offset) |

21 | img2_mask = (img2_warped != -1) # Mask == 1 inside the image |

22 | img2_warped[~img2_mask] = 0 # Return background values to 0 |

23 | |

24 | # Plot warped images |

25 | plt.subplot(1,2,1) |

26 | plt.imshow(img1_warped) |

27 | plt.title('Image 1 warped') |

28 | plt.axis('off') |

29 | |

30 | plt.subplot(1,2,2) |

31 | plt.imshow(img2_warped) |

32 | plt.title('Image 2 warped') |

33 | plt.axis('off') |

34 | |

35 | plt.show() |

1 | merged = img1_warped + img2_warped |

2 | |

3 | # Track the overlap by adding the masks together |

4 | overlap = (img1_mask * 1.0 + # Multiply by 1.0 for bool -> float conversion |

5 | img2_mask) |

6 | |

7 | # Normalize through division by `overlap` - but ensure the minimum is 1 |

8 | normalized = merged / np.maximum(overlap, 1) |

9 | plt.imshow(normalized) |

10 | plt.axis('off') |

11 | plt.show() |

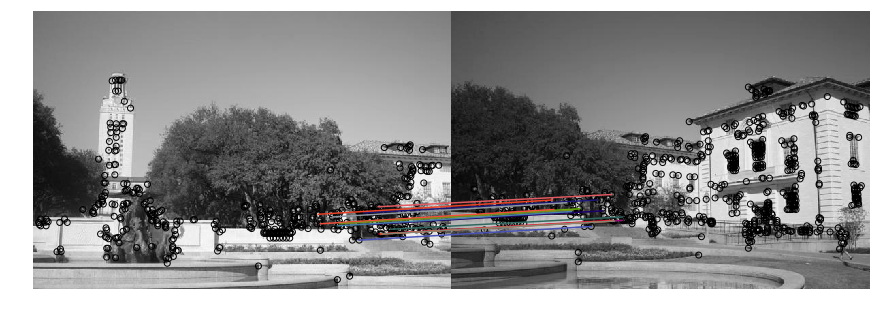

RANSAC

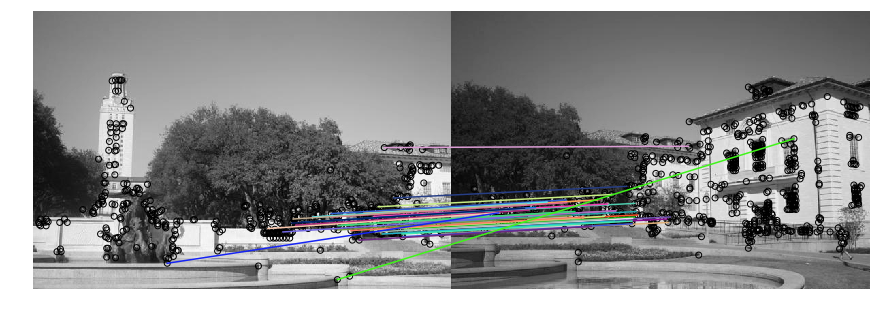

Rather than directly feeding all our keypoint matches into fit_affine_matrix function, we can instead use RANSAC (“RANdom SAmple Consensus”) to select only “inliers” to use to compute the transformation matrix.

The steps of RANSAC are:

- Select random set of matches

- Compute affine transformation matrix

- Find inliers using the given threshold

- Repeat and keep the largest set of inliers

- Re-compute least-squares estimate on all of the inliers

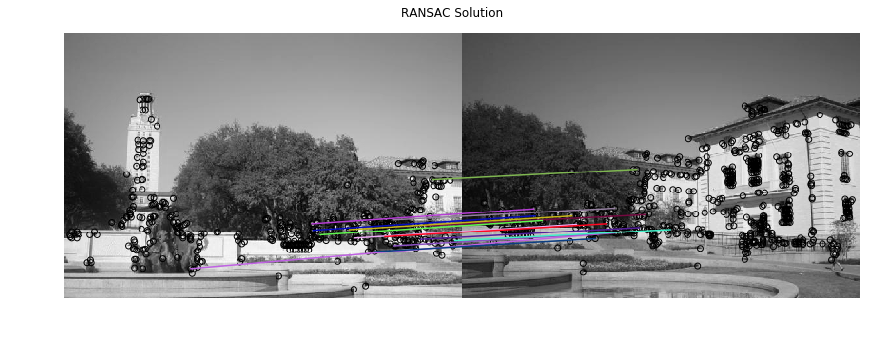

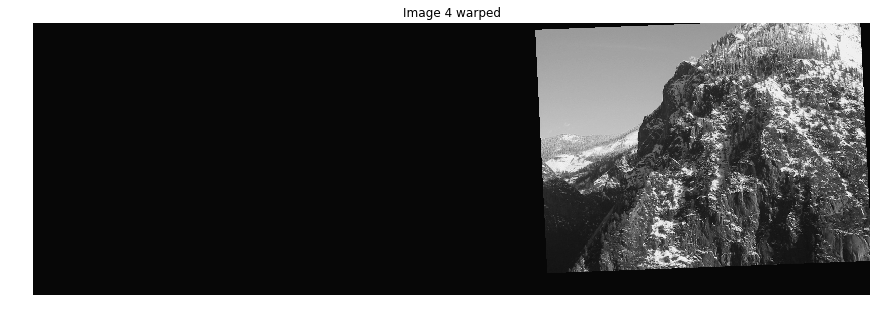

Implement ransac in panorama.py, run through the following code to get a panorama. You can see the difference from the result we get without RANSAC.

1 | from panorama import ransac |

2 | H, robust_matches = ransac(keypoints1, keypoints2, matches, threshold=1) |

3 | |

4 | # Visualize robust matches |

5 | fig, ax = plt.subplots(1, 1, figsize=(15, 12)) |

6 | plot_matches(ax, img1, img2, keypoints1, keypoints2, robust_matches) |

7 | plt.axis('off') |

8 | plt.show() |

9 | |

10 | plt.imshow(imread('solution_ransac.png')) |

11 | plt.axis('off') |

12 | plt.title('RANSAC Solution') |

13 | plt.show() |

1 | output_shape, offset = get_output_space(img1, [img2], [H]) |

2 | |

3 | # Warp images into output sapce |

4 | img1_warped = warp_image(img1, np.eye(3), output_shape, offset) |

5 | img1_mask = (img1_warped != -1) # Mask == 1 inside the image |

6 | img1_warped[~img1_mask] = 0 # Return background values to 0 |

7 | |

8 | img2_warped = warp_image(img2, H, output_shape, offset) |

9 | img2_mask = (img2_warped != -1) # Mask == 1 inside the image |

10 | img2_warped[~img2_mask] = 0 # Return background values to 0 |

11 | |

12 | # Plot warped images |

13 | plt.subplot(1,2,1) |

14 | plt.imshow(img1_warped) |

15 | plt.title('Image 1 warped') |

16 | plt.axis('off') |

17 | |

18 | plt.subplot(1,2,2) |

19 | plt.imshow(img2_warped) |

20 | plt.title('Image 2 warped') |

21 | plt.axis('off') |

22 | |

23 | plt.show() |

1 | merged = img1_warped + img2_warped |

2 | |

3 | # Track the overlap by adding the masks together |

4 | overlap = (img1_mask * 1.0 + # Multiply by 1.0 for bool -> float conversion |

5 | img2_mask) |

6 | |

7 | # Normalize through division by `overlap` - but ensure the minimum is 1 |

8 | normalized = merged / np.maximum(overlap, 1) |

9 | plt.imshow(normalized) |

10 | plt.axis('off') |

11 | plt.show() |

12 | |

13 | plt.imshow(imread('solution_ransac_panorama.png')) |

14 | plt.axis('off') |

15 | plt.title('RANSAC Panorama Solution') |

16 | plt.show() |

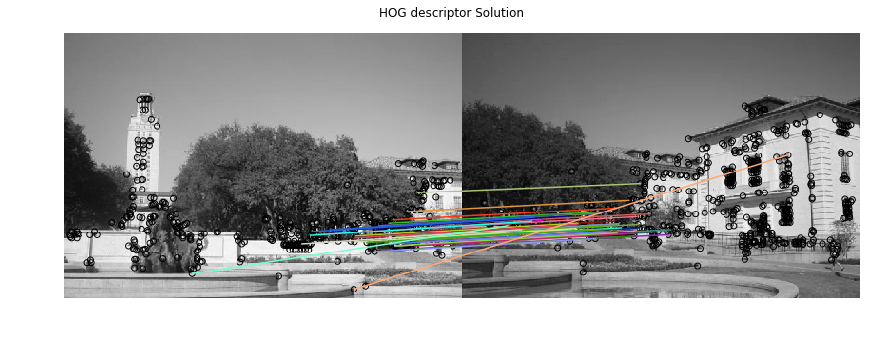

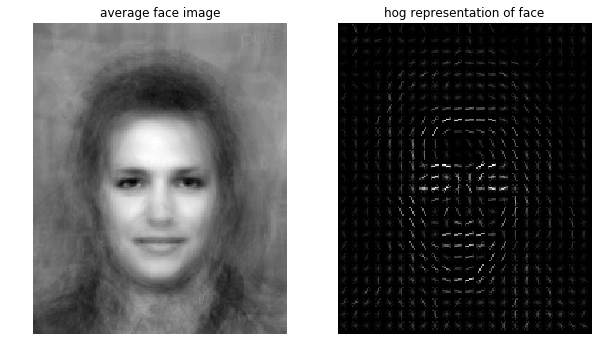

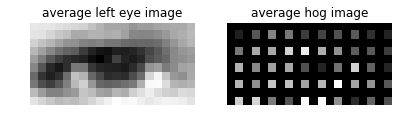

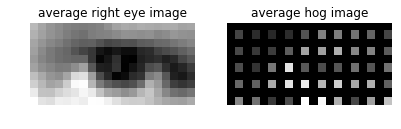

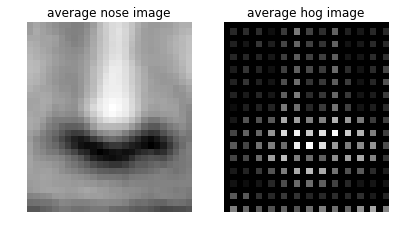

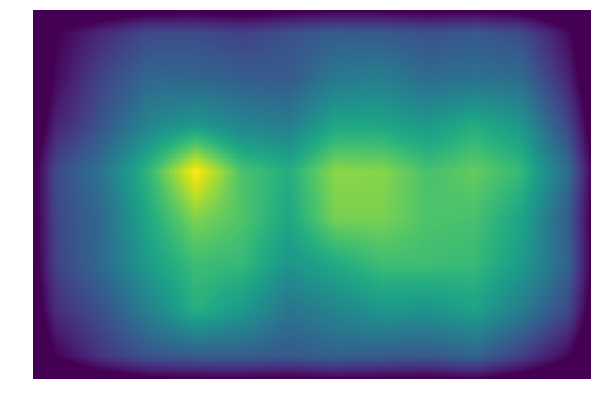

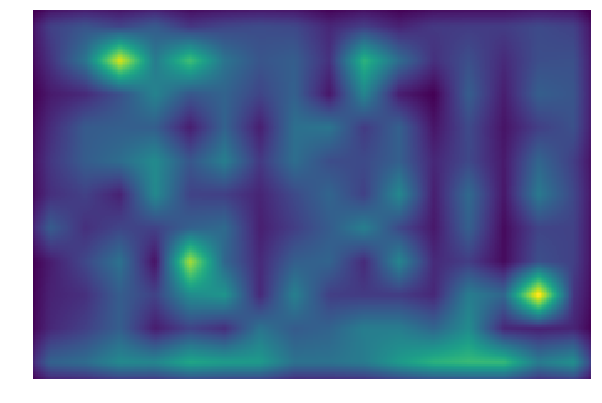

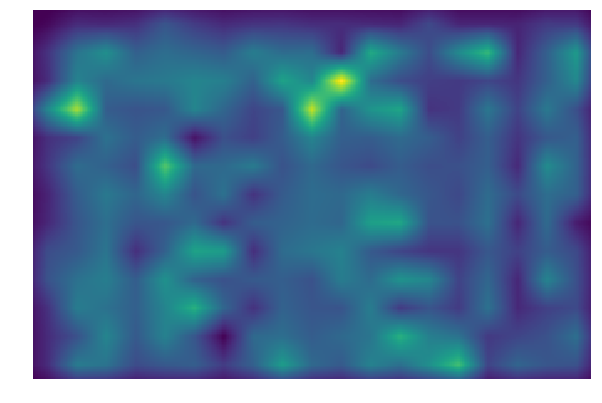

Histogram of Oriented Gradients (HOG)

In the above code, you are using the simple_descriptor, and in this section, you are going to implement a simplified version of HOG descriptor.

HOG stands for Histogram of Oriented Gradients. In HOG descriptor, the distribution ( histograms ) of directions of gradients ( oriented gradients ) are used as features. Gradients ( x and y derivatives ) of an image are useful because the magnitude of gradients is large around edges and corners ( regions of abrupt intensity changes ) and we know that edges and corners pack in a lot more information about object shape than flat regions.

The steps of HOG are:

- compute the gradient image in and

Use the sobel filter provided by skimage.filters- compute gradient histograms

Divide image into cells, and calculate histogram of gradient in each cell.- normalize across block

Normalize the histogram so that they- flattening block into a feature vector

Implement hog_descriptor in panorama.py, and run through the following code to get a panorama image.

关于HOG特征的算法流程,可以阅读此文

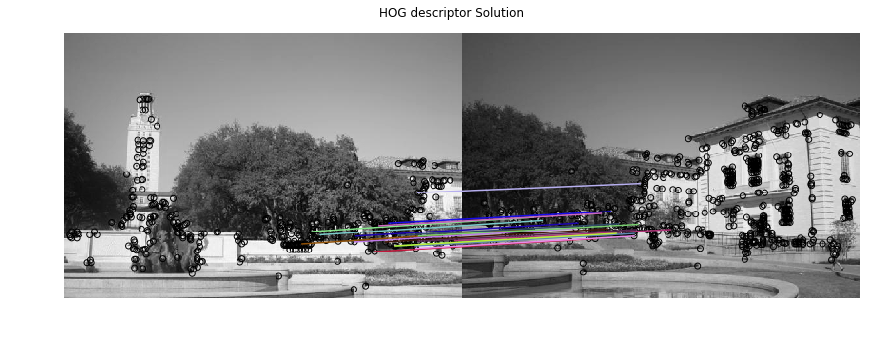

1 | from panorama import hog_descriptor |

2 | |

3 | img1 = imread('uttower1.jpg', as_grey=True) |

4 | img2 = imread('uttower2.jpg', as_grey=True) |

5 | |

6 | # Detect keypoints in both images |

7 | keypoints1 = corner_peaks(harris_corners(img1, window_size=3), |

8 | threshold_rel=0.05, |

9 | exclude_border=8) |

10 | keypoints2 = corner_peaks(harris_corners(img2, window_size=3), |

11 | threshold_rel=0.05, |

12 | exclude_border=8) |

1 | # Extract features from the corners |

2 | desc1 = describe_keypoints(img1, keypoints1, |

3 | desc_func=hog_descriptor, |

4 | patch_size=16) |

5 | desc2 = describe_keypoints(img2, keypoints2, |

6 | desc_func=hog_descriptor, |

7 | patch_size=16) |

8 | |

9 | # Match descriptors in image1 to those in image2 |

10 | matches = match_descriptors(desc1, desc2, 0.7) |

11 | |

12 | # Plot matches |

13 | fig, ax = plt.subplots(1, 1, figsize=(15, 12)) |

14 | ax.axis('off') |

15 | plot_matches(ax, img1, img2, keypoints1, keypoints2, matches) |

16 | plt.show() |

17 | plt.imshow(imread('solution_hog.png')) |

18 | plt.axis('off') |

19 | plt.title('HOG descriptor Solution') |

20 | plt.show() |

1 | from panorama import ransac |

2 | H, robust_matches = ransac(keypoints1, keypoints2, matches, threshold=1) |

3 | |

4 | # Plot matches |

5 | fig, ax = plt.subplots(1, 1, figsize=(15, 12)) |

6 | plot_matches(ax, img1, img2, keypoints1, keypoints2, robust_matches) |

7 | plt.axis('off') |

8 | plt.show() |

9 | |

10 | plt.imshow(imread('solution_hog_ransac.png')) |

11 | plt.axis('off') |

12 | plt.title('HOG descriptor Solution') |

13 | plt.show() |

1 | output_shape, offset = get_output_space(img1, [img2], [H]) |

2 | |

3 | # Warp images into output sapce |

4 | img1_warped = warp_image(img1, np.eye(3), output_shape, offset) |

5 | img1_mask = (img1_warped != -1) # Mask == 1 inside the image |

6 | img1_warped[~img1_mask] = 0 # Return background values to 0 |

7 | |

8 | img2_warped = warp_image(img2, H, output_shape, offset) |

9 | img2_mask = (img2_warped != -1) # Mask == 1 inside the image |

10 | img2_warped[~img2_mask] = 0 # Return background values to 0 |

11 | |

12 | # Plot warped images |

13 | plt.subplot(1,2,1) |

14 | plt.imshow(img1_warped) |

15 | plt.title('Image 1 warped') |

16 | plt.axis('off') |

17 | |

18 | plt.subplot(1,2,2) |

19 | plt.imshow(img2_warped) |

20 | plt.title('Image 2 warped') |

21 | plt.axis('off') |

22 | |

23 | plt.show() |

1 | merged = img1_warped + img2_warped |

2 | |

3 | # merge之后重叠部分亮度上来了,需要通过归一化回复原来亮度 |

4 | # Track the overlap by adding the masks together |

5 | overlap = (img1_mask * 1.0 + # Multiply by 1.0 for bool -> float conversion |

6 | img2_mask) |

7 | |

8 | # Normalize through division by `overlap` - but ensure the minimum is 1 |

9 | normalized = merged / np.maximum(overlap, 1) |

10 | plt.imshow(normalized) |

11 | plt.axis('off') |

12 | plt.show() |

13 | |

14 | plt.imshow(imread('solution_hog_panorama.png')) |

15 | plt.axis('off') |

16 | plt.title('HOG Descriptor Panorama Solution') |

17 | plt.show() |

实现了HOG特征描述符之后,可以替代掉之前的归一化密度作为特征的匹配。效果不错。

Extra Credit: Better Image Merging

You will notice the blurry region and unpleasant lines in the middle of the final panoramic image. In the cell below, come up with a better merging scheme to make the panorama look more natural. Be creative!

怎么消除拼接处的两条拼接痕迹呢?

加权融合

博客上的处理思路是加权融合,在重叠部分由前一幅图像慢慢过渡到第二幅图像,即将图像的重叠区域的像素值按一定的权值相加合成新的图像。

我的理解就是两块重叠的区域,从左向右每个像素由图1和图2融合,最开始图1的权重最大,图2的权重最小,向右融合过程中图1权重逐渐减小,图2权重逐渐增大。

融合的公式可以简单理解成:

而权重的值则与像素点在重叠区域的位置相关,可以定义成:

论文《Automatic Panoramic Image Stitching using Invariant Features》论文针对拼接步骤,提出了三点:

- 自动拼接校直 Automatic Panorama Straightening

校直是用BA做的相机参数的估计,用来解决拼接过程中的图像的旋转

- 增益补偿 Gain Compensation

增益补偿是用过使用最小二乘法计算每个拼接块的增益,用来均衡整幅图像中各个拼接块的亮度不均问题

- 多频带混合 Multi-Band Blending

1 | # Modify the code below |

2 | |

3 | ### YOUR CODE HERE |

4 | |

5 | # # 拼接方法一:题目原有方法,两幅图叠加后在对重叠区域和非重叠区域做亮度调整。 |

6 | merged = img1_warped + img2_warped |

7 | overlap = (img1_mask * 1.0 + img2_mask) |

8 | output = merged / np.maximum(overlap, 1) |

9 | |

10 | plt.imshow(output) |

11 | plt.axis('off') |

12 | plt.title('Way Origin') |

13 | plt.show() |

14 | |

15 | # 拼接方法二:加权融合 |

16 | # 先把两幅图叠加在一起 |

17 | merged = img1_warped + img2_warped |

18 | # overlap = (img1_mask * 1.0 + img2_mask) |

19 | # output = merged / np.maximum(overlap, 1) |

20 | # 1. 找到重叠区域在warped图像中的左边界和右边界,可以根据已知的mask图像进行判断 |

21 | # 1.1 重叠区域左边界就是img2_mask中第一个不全为零的列 |

22 | for col in range(img2_warped.shape[1]): |

23 | if not np.all(img2_mask[:, col] == False): |

24 | break |

25 | regionLeft = col |

26 | # 1.2 重叠区域右边界就是img1_mask中有值区域的右边,其实就是img1的宽所在的列 |

27 | regionRight = img1.shape[1] |

28 | # 1.3 区域宽度 |

29 | regionWidth = regionRight - regionLeft + 1 |

30 | |

31 | # 2. 遍历区域内像素点进行融合 |

32 | for col in range(regionLeft, regionRight+1): |

33 | for row in range(img2_warped.shape[0]): |

34 | # 2.1 计算α |

35 | alpha = (col - regionLeft) / (regionWidth) |

36 | alpha = 1 - alpha |

37 | # 2.2 处理区域内的重叠点 |

38 | if img1_mask[row,col] and img2_mask[row,col]: |

39 | merged[row,col] = alpha * img1_warped[row,col] + (1 - alpha) * img2_warped[row,col] |

40 | |

41 | output = merged |

42 | |

43 | plt.imshow(output) |

44 | plt.axis('off') |

45 | plt.title('Way 1: weighted fusion') |

46 | plt.show() |

47 | ### END YOUR CODE |

OpenPano

Github上宇哥开源的拼接库OpenPano

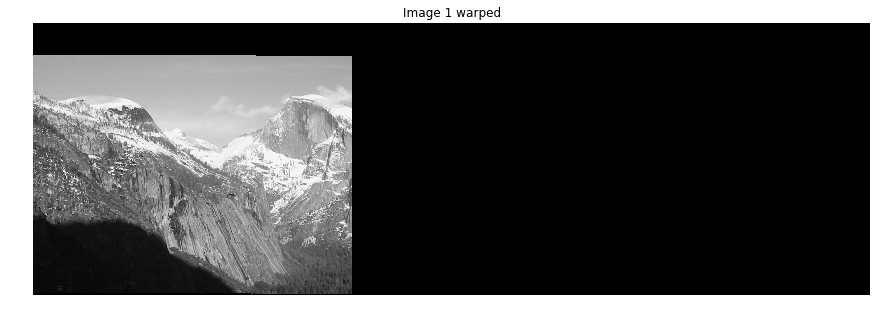

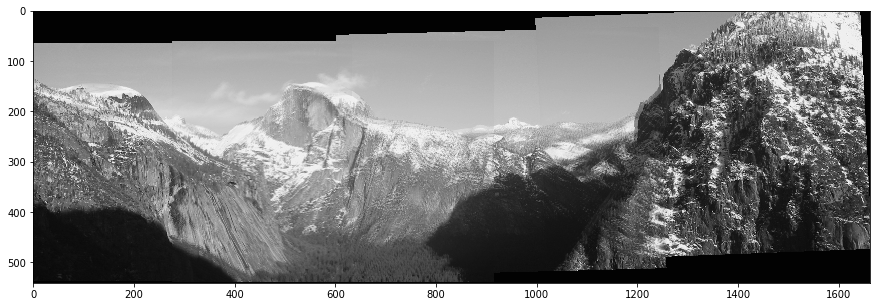

Extra Credit: Stitching Multiple Images

Work in the cell below to complete the code to stitch an ordered chain of images.

Given a sequence of m images , take every neighboring pair of images and compute the transformation matrix which converts points from the coordinate frame of to the frame of . Then, select a reference image , which is in the middle of the chain. We want our final panorama image to be in the coordinate frame of . So, for each that is not the reference image, we need a transformation matrix that will convert points in frame to frame .

-Hint:

- If you are confused, you may want to review the Linear Algebra slides on how to combine the effects of multiple transformation matrices.

- The inverse of transformation matrix has the reverse effect. Please use numpy.linalg.inv function whenever you want to compute matrix inverse.

1 | img1 = imread('yosemite1.jpg', as_grey=True) |

2 | img2 = imread('yosemite2.jpg', as_grey=True) |

3 | img3 = imread('yosemite3.jpg', as_grey=True) |

4 | img4 = imread('yosemite4.jpg', as_grey=True) |

5 | |

6 | # Detect keypoints in each image |

7 | keypoints1 = corner_peaks(harris_corners(img1, window_size=3), |

8 | threshold_rel=0.05, |

9 | exclude_border=8) |

10 | keypoints2 = corner_peaks(harris_corners(img2, window_size=3), |

11 | threshold_rel=0.05, |

12 | exclude_border=8) |

13 | keypoints3 = corner_peaks(harris_corners(img3, window_size=3), |

14 | threshold_rel=0.05, |

15 | exclude_border=8) |

16 | keypoints4 = corner_peaks(harris_corners(img4, window_size=3), |

17 | threshold_rel=0.05, |

18 | exclude_border=8) |

19 | |

20 | # Describe keypoints |

21 | desc1 = describe_keypoints(img1, keypoints1, |

22 | desc_func=simple_descriptor, |

23 | patch_size=patch_size) |

24 | desc2 = describe_keypoints(img2, keypoints2, |

25 | desc_func=simple_descriptor, |

26 | patch_size=patch_size) |

27 | desc3 = describe_keypoints(img3, keypoints3, |

28 | desc_func=simple_descriptor, |

29 | patch_size=patch_size) |

30 | desc4 = describe_keypoints(img4, keypoints4, |

31 | desc_func=simple_descriptor, |

32 | patch_size=patch_size) |

33 | |

34 | # Match keypoints in neighboring images |

35 | matches12 = match_descriptors(desc1, desc2, 0.7) |

36 | matches23 = match_descriptors(desc2, desc3, 0.7) |

37 | matches34 = match_descriptors(desc3, desc4, 0.7) |

38 | |

39 | ### YOUR CODE HERE |

40 | # RANSAC 估计 仿射变换矩阵 |

41 | H12, robust_matches12 = ransac(keypoints1, keypoints2, matches12, threshold=1) |

42 | H23, robust_matches23 = ransac(keypoints2, keypoints3, matches23, threshold=1) |

43 | H34, robust_matches34 = ransac(keypoints3, keypoints4, matches34, threshold=1) |

44 | |

45 | # 生成outspace大背景 |

46 | # 注意utils.py中的get_output_space用法,参数2:imgs与参数3:transforms要对应 |

47 | # 注意选取referImg 参考图像,这里选择图2 |

48 | output_shape, offset = get_output_space(img2, [img1, img3, img4], [np.linalg.inv(H12), H23, np.dot(H23,H34)]) |

49 | |

50 | # 将图像放入大背景中 |

51 | # Warp images into output sapce |

52 | img1_warped = warp_image(img1, np.linalg.inv(H12), output_shape, offset) |

53 | img1_mask = (img1_warped != -1) # Mask == 1 inside the image |

54 | img1_warped[~img1_mask] = 0 # Return background values to 0 |

55 | |

56 | img2_warped = warp_image(img2, np.eye(3), output_shape, offset) |

57 | img2_mask = (img2_warped != -1) # Mask == 1 inside the image |

58 | img2_warped[~img2_mask] = 0 # Return background values to 0 |

59 | |

60 | img3_warped = warp_image(img3, H23, output_shape, offset) |

61 | img3_mask = (img3_warped != -1) # Mask == 1 inside the image |

62 | img3_warped[~img3_mask] = 0 # Return background values to 0 |

63 | |

64 | img4_warped = warp_image(img4, np.dot(H23,H34), output_shape, offset) |

65 | img4_mask = (img4_warped != -1) # Mask == 1 inside the image |

66 | img4_warped[~img4_mask] = 0 # Return background values to 0 |

67 | |

68 | |

69 | plt.imshow(img1_warped) |

70 | plt.axis('off') |

71 | plt.title('Image 1 warped') |

72 | plt.show() |

73 | |

74 | plt.imshow(img2_warped) |

75 | plt.axis('off') |

76 | plt.title('Image 2 warped') |

77 | plt.show() |

78 | |

79 | plt.imshow(img3_warped) |

80 | plt.axis('off') |

81 | plt.title('Image 3 warped') |

82 | plt.show() |

83 | |

84 | plt.imshow(img4_warped) |

85 | plt.axis('off') |

86 | plt.title('Image 4 warped') |

87 | plt.show() |

88 | |

89 | ### END YOUR CODE |

1 | # 进行拼接,拼接方式一 |

2 | merged = img1_warped + img2_warped + img3_warped + img4_warped |

3 | |

4 | # Track the overlap by adding the masks together |

5 | overlap = (img2_mask * 1.0 + # Multiply by 1.0 for bool -> float conversion |

6 | img1_mask + img3_mask + img4_mask) |

7 | |

8 | # Normalize through division by `overlap` - but ensure the minimum is 1 |

9 | normalized = merged / np.maximum(overlap, 1) |

10 | |

11 | plt.imshow(normalized) |

12 | plt.show() |

多幅图的拼接就可以看出来简单方式的问题了:

- 拼接痕迹的存在,可以使用加权平均,

- 各个拼接块亮度不均匀,需要做增益补偿,

- 存在未知的图像的旋转,可能是由于拍摄时相机发生了位姿的变动,需要做优化处理(校直),

- 重叠部分的拼接并不能直接实现所有像素的对应叠加,可以看出来存在模糊的情况,可以使用多频带混合的方式去做处理。

Resizing-SeamCarving

复现Seam Carving(接缝拼接)算法,实现基于内容感知的图像智能缩放,并且实现图像中目标的移除,了解图像能量图、动态编程。

@Reference

CS131: Computer Vision Foundations and Applications

The material presented here is inspired from:

The whole seam carving process was covered in lecture 8, please refer to the slides for more details to the different concepts introduced here.

这份作业的主题是实现SeamCarving(接缝裁剪)的算法,即基于图像内容感知的图像缩放算法。

- 实现图像裁剪

- 实现图像扩大

- 其他实验

也可以参考这篇专栏讲了如何用Python实现Seam carving算法,原理讲解的挺清楚的。

如何用Python实现神奇切图算法Seam Carving ?

Sean Carving 的基本思路

- 计算图像能量图

- 寻找最小能量线

- 移除得到的最小能量线,让图片的宽度缩小一个像素

Seam carving is an algorithm for content-aware image resizing.

To understand all the concepts in this homework, make sure to read again the slides from lecture 8

http://vision.stanford.edu/teaching/cs131_fall1718/files/08_seam_carving.pdf

1 | from skimage import io, util |

2 | |

3 | # Load image |

4 | img = io.imread('imgs/broadway_tower.jpg') |

5 | img = util.img_as_float(img) |

6 | |

7 | plt.title('Original Image') |

8 | plt.imshow(img) |

9 | plt.show() |

Energy function

We will now implemented the energy_function to compute the energy of the image.

The energy at each pixel is the sum of:

- absolute value of the gradient in the direction

- absolute value of the gradient in the direction

The function should take around 0.01 to 0.1 seconds to compute.

图像能量图此处定义为图像每个像素的两方向梯度幅值之和,当然能量也可以是别的定义方式:梯度幅度、熵图、显著图等等。计算梯度要注意转换为灰度图再进行。

1 | from seam_carving import energy_function |

2 | |

3 | test_img = np.array([[1.0, 2.0, 1.5], |

4 | [3.0, 1.0, 2.0], |

5 | [4.0, 0.5, 3.0]]) |

6 | test_img = np.stack([test_img] * 3, axis=2) |

7 | assert test_img.shape == (3, 3, 3) |

8 | |

9 | # Compute energy function |

10 | test_energy = energy_function(test_img) |

11 | |

12 | solution_energy = np.array([[3.0, 1.25, 1.0], |

13 | [3.5, 1.25, 1.75], |

14 | [4.5, 1.0, 3.5]]) |

15 | |

16 | print("Image (channel 0):") |

17 | print(test_img[:, :, 0]) |

18 | |

19 | print("Energy:") |

20 | print(test_energy) |

21 | print("Solution energy:") |

22 | print(solution_energy) |

23 | |

24 | assert np.allclose(test_energy, solution_energy) |

1 | # Compute energy function |

2 | start = time() |

3 | energy = energy_function(img) |

4 | end = time() |

5 | |

6 | print("Computing energy function: %f seconds." % (end - start)) |

7 | |

8 | plt.title('Energy') |

9 | plt.axis('off') |

10 | plt.imshow(energy) |

11 | plt.show() |

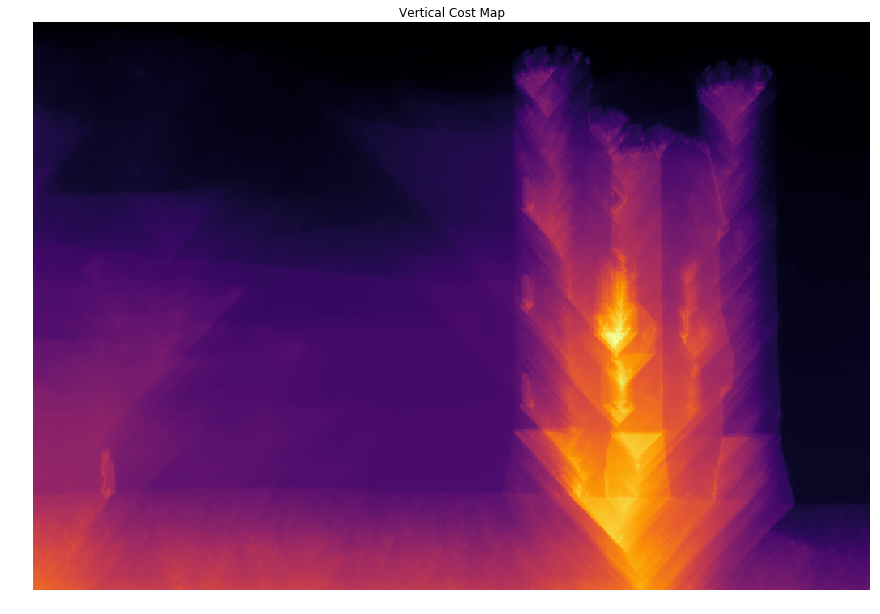

Compute cost

Now implement the function compute_cost.

Starting from the energy map, we’ll go from the first row of the image to the bottom and compute the minimal cost at each pixel.

We’ll use dynamic programming to compute the cost line by line starting from the first row.

The function should take around 0.05 seconds to complete.

此处要求使用动态规划计算整幅图的cost图,用于第三步寻找最小能量线。

此处动态规划的意思是从图像的最上面一行开始,计算能量的最小累加路线,cost图中每一行的每个像素从它八相邻的上一行的三个像素中取最小cost值的元素进行累加。

直到累加到最后一行,同时在计算过程中保留每个像素的累加路径,即像素的cost值是从上一行哪一个元素相加得到的(用-1,0,1表示上左,上中,上右)。

题目中要求只用一个循环实现,则需要用向量化的形式进行计算。

1 | from seam_carving import compute_cost |

2 | |

3 | # Let's first test with a small example |

4 | |

5 | test_energy = np.array([[1.0, 2.0, 1.5], |

6 | [3.0, 1.0, 2.0], |

7 | [4.0, 0.5, 3.0]]) |

8 | |

9 | solution_cost = np.array([[1.0, 2.0, 1.5], |

10 | [4.0, 2.0, 3.5], |

11 | [6.0, 2.5, 5.0]]) |

12 | |

13 | solution_paths = np.array([[ 0, 0, 0], |

14 | [ 0, -1, 0], |

15 | [ 1, 0, -1]]) |

16 | |

17 | # Vertical Cost Map |

18 | vcost, vpaths = compute_cost(_, test_energy, axis=1) # don't need the first argument for compute_cost |

19 | |

20 | print("Energy:") |

21 | print(test_energy) |

22 | |

23 | print("Cost:") |

24 | print(vcost) |

25 | print("Solution cost:") |

26 | print(solution_cost) |

27 | |

28 | print("Paths:") |

29 | print(vpaths) |

30 | print("Solution paths:") |

31 | print(solution_paths) |

1 | # Vertical Cost Map |

2 | start = time() |

3 | vcost, _ = compute_cost(_, energy, axis=1) # don't need the first argument for compute_cost |

4 | end = time() |

5 | |

6 | print("Computing vertical cost map: %f seconds." % (end - start)) |

7 | |

8 | plt.title('Vertical Cost Map') |

9 | plt.axis('off') |

10 | plt.imshow(vcost, cmap='inferno') |

11 | plt.show() |

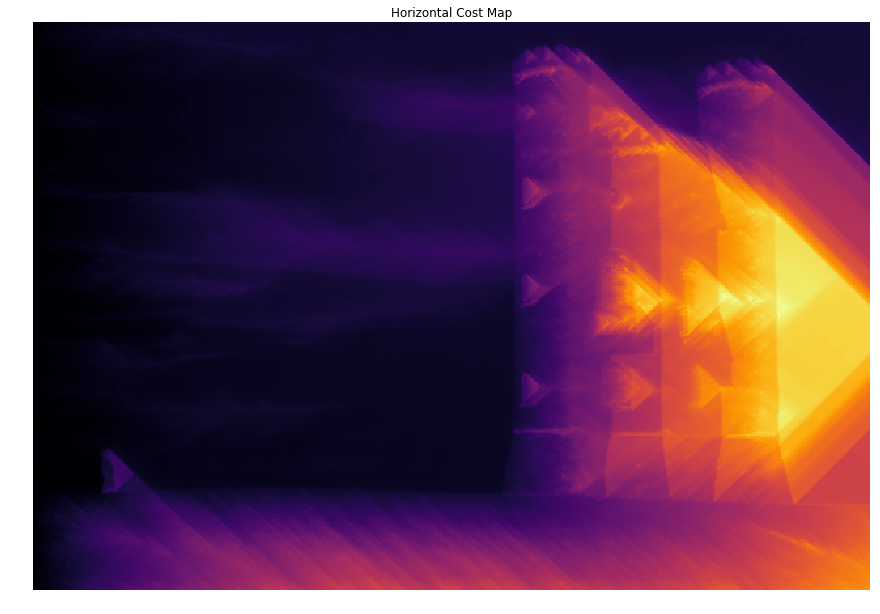

1 | # Horizontal Cost Map |

2 | start = time() |

3 | hcost, _ = compute_cost(_, energy, axis=0) |

4 | end = time() |

5 | |

6 | print("Computing horizontal cost map: %f seconds." % (end - start)) |

7 | |

8 | plt.title('Horizontal Cost Map') |

9 | plt.axis('off') |

10 | plt.imshow(hcost, cmap='inferno') |

11 | plt.show() |

Finding optimal seams

Using the cost maps we found above, we can determine the seam with the lowest energy in the image.

We can then remove this optimal seam, and repeat the process until we obtain a desired width.

由于上面计算能量cost图时,已经保存了每个像素点的叠加路径,因此可以在最后一行找到最小的cost,并从该点开始,向上溯源,找到一条最佳的接缝,即最小的能量线。

Backtrack seam

Implement function backtrack_seam

1 | from seam_carving import backtrack_seam |

2 | |

3 | # Let's first test with a small example |

4 | cost = np.array([[1.0, 2.0, 1.5], |

5 | [4.0, 2.0, 3.5], |

6 | [6.0, 2.5, 5.0]]) |

7 | |

8 | paths = np.array([[ 0, 0, 0], |

9 | [ 0, -1, 0], |

10 | [ 1, 0, -1]]) |

11 | |

12 | |

13 | # Vertical Backtracking |

14 | |

15 | end = np.argmin(cost[-1]) |

16 | seam_energy = cost[-1, end] |

17 | seam = backtrack_seam(vpaths, end) |

18 | |

19 | print('Seam Energy:', seam_energy) |

20 | print('Seam:', seam) |

21 | |

22 | assert seam_energy == 2.5 |

23 | assert np.allclose(seam, [0, 1, 1]) |

1 | vcost, vpaths = compute_cost(img, energy) |

2 | |

3 | # Vertical Backtracking |

4 | start = time() |

5 | end = np.argmin(vcost[-1]) |

6 | seam_energy = vcost[-1, end] |

7 | seam = backtrack_seam(vpaths, end) |

8 | end = time() |

9 | |

10 | print("Backtracking optimal seam: %f seconds." % (end - start)) |

11 | print('Seam Energy:', seam_energy) |

12 | |

13 | # Visualize seam |

14 | vseam = np.copy(img) |

15 | for row in range(vseam.shape[0]): |

16 | vseam[row, seam[row], :] = np.array([1.0, 0, 0]) |

17 | |

18 | plt.title('Vertical Seam') |

19 | plt.axis('off') |

20 | plt.imshow(vseam) |

21 | plt.show() |

到此,就实现了一条接缝的查找,接下来移除它就可以实现一个像素宽度的缩小。

In the image above, the optimal vertical seam (minimal cost) goes through the portion of sky without any cloud, which yields the lowest energy.

Reduce

We can now use the function backtrack and remove_seam iteratively to reduce the size of the image through seam carving.

Each reduce can take around 10 seconds to compute, depending on your implementation.

If it’s too long, try to vectorize your code in compute_cost to only use one loop.

1 | from seam_carving import reduce |

2 | |

3 | # Let's first test with a small example |

4 | test_img = np.arange(9, dtype=np.float64).reshape((3, 3)) |

5 | test_img = np.stack([test_img, test_img, test_img], axis=2) |

6 | assert test_img.shape == (3, 3, 3) |

7 | |

8 | cost = np.array([[1.0, 2.0, 1.5], |

9 | [4.0, 2.0, 3.5], |

10 | [6.0, 2.5, 5.0]]) |

11 | |

12 | paths = np.array([[ 0, 0, 0], |

13 | [ 0, -1, 0], |

14 | [ 1, 0, -1]]) |

15 | |

16 | # Reduce image width |

17 | W_new = 2 |

18 | |

19 | # We force the cost and paths to our values |

20 | out = reduce(test_img, W_new, cfunc=lambda x, y: (cost, paths)) |

21 | |

22 | print("Original image (channel 0):") |

23 | print(test_img[:, :, 0]) |

24 | print("Reduced image (channel 0): we see that seam [0, 4, 7] is removed") |

25 | print(out[:, :, 0]) |

26 | |

27 | assert np.allclose(out[:, :, 0], np.array([[1, 2], [3, 5], [6, 8]])) |

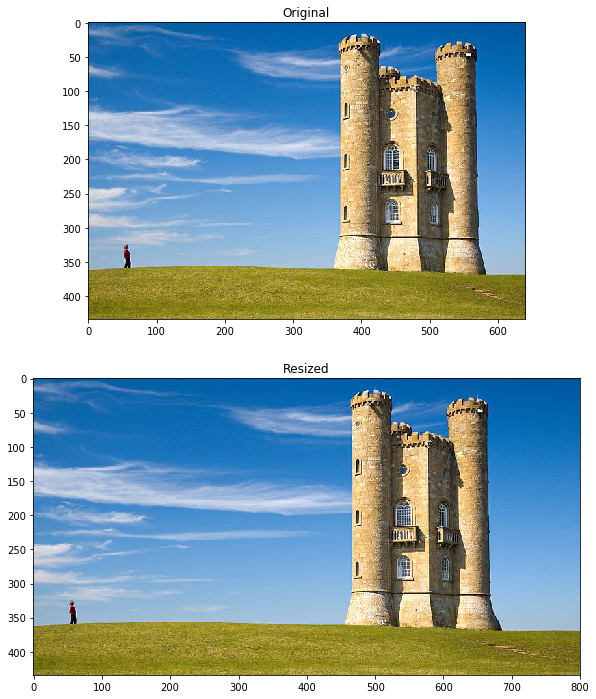

1 | # Reduce image width |

2 | H, W, _ = img.shape |

3 | W_new = 400 |

4 | |

5 | start = time() |

6 | out = reduce(img, W_new) |

7 | end = time() |

8 | |

9 | print("Reducing width from %d to %d: %f seconds." % (W, W_new, end - start)) |

10 | |

11 | plt.subplot(2, 1, 1) |

12 | plt.title('Original') |

13 | plt.imshow(img) |

14 | |

15 | plt.subplot(2, 1, 2) |

16 | plt.title('Resized') |

17 | plt.imshow(out) |

18 | |

19 | plt.show() |

裁剪部分我是按照每一行删除一个元素的方式去实现的。

能够达到题目要求的10s左右的时间要求。

We observe that resizing from width 640 to width 400 conserves almost all the important part of the image (the person and the castle), where a standard resizing would have compressed everything.

All the vertical seams removed avoid the person and the castle.

程序同样可以缩减高度,程序中对于另一个维度的裁剪做了判断,

只需要seam carving流程走之前旋转图像,走完流程之后再将裁剪宽度的图像旋转回来就成了裁剪高度了。

1 | # Reduce image height |

2 | H, W, _ = img.shape |

3 | H_new = 300 |

4 | |

5 | start = time() |

6 | out = reduce(img, H_new, axis=0) |

7 | end = time() |

8 | |

9 | print("Reducing height from %d to %d: %f seconds." % (H, H_new, end - start)) |

10 | |

11 | plt.subplot(1, 2, 1) |

12 | plt.title('Original') |

13 | plt.imshow(img) |

14 | |

15 | plt.subplot(1, 2, 2) |

16 | plt.title('Resized') |

17 | plt.imshow(out) |

18 | |

19 | plt.show() |

For reducing the height, we observe that the result does not look as nice.

The issue here is that the castle is on all the height of the image, so most horizontal seams will go through it.

Interestingly, we observe that most of the grass is not removed. This is because the grass has small variation between neighboring pixels (in a kind of noisy pattern) that make it high energy.

The seams removed go through the sky on the left, go under the castle to remove some grass and then back up in the low energy blue sky.

虽然实现了高度的裁剪,但是图像看起来怪怪的,这是可以从图像上做分析的。

城堡明显具有很强的能量,详见第一步能量图计算,因此裁剪高度时,每一条最小能量线都会越过它。

草坪看起来其实跟噪声一样,每个像素点与相邻像素都有或大或小的差异,因此梯度也是较大的,因此能量也较高,不会首先裁剪草坪而是更平滑的蓝天,

而城堡下面的草坪凹陷是因为城堡上方的蓝天已被裁剪光了,最小能量线无法从上方穿过时才从城堡下方的草坪开始裁剪的。

Image Enlarging

Enlarge naive

We now want to tackle the reverse problem of enlarging an image.

One naive way to approach the problem would be to duplicate the optimal seam iteratively until we reach the desired size.

图像扩大的一种方式是直接在最小能量线的地方复制它。

在enlarge_naive函数中实现它。

1 | from seam_carving import enlarge_naive |

2 | |

3 | # Let's first test with a small example |

4 | test_img = np.arange(9, dtype=np.float64).reshape((3, 3)) |

5 | test_img = np.stack([test_img, test_img, test_img], axis=2) |

6 | assert test_img.shape == (3, 3, 3) |

7 | |

8 | cost = np.array([[1.0, 2.0, 1.5], |

9 | [4.0, 2.0, 3.5], |

10 | [6.0, 2.5, 5.0]]) |

11 | |

12 | paths = np.array([[ 0, 0, 0], |

13 | [ 0, -1, 0], |

14 | [ 1, 0, -1]]) |

15 | |

16 | # Increase image width |

17 | W_new = 4 |

18 | |

19 | # We force the cost and paths to our values |

20 | out = enlarge_naive(test_img, W_new, cfunc=lambda x, y: (cost, paths)) |

21 | |

22 | print("Original image (channel 0):") |

23 | print(test_img[:, :, 0]) |

24 | print("Enlarged image (channel 0): we see that seam [0, 4, 7] is duplicated") |

25 | print(out[:, :, 0]) |

26 | |

27 | assert np.allclose(out[:, :, 0], np.array([[0, 0, 1, 2], [3, 4, 4, 5], [6, 7, 7, 8]])) |

1 | W_new = 800 |

2 | |

3 | # This is a naive implementation of image enlarging |

4 | # which iteratively computes energy function, finds optimal seam |

5 | # and duplicates it. |

6 | # This process will a stretching artifact by choosing the same seam |

7 | start = time() |

8 | enlarged = enlarge_naive(img, W_new) |

9 | end = time() |

10 | |

11 | # Can take around 20 seconds |

12 | print("Enlarging(naive) height from %d to %d: %f seconds." \ |

13 | % (W, W_new, end - start)) |

14 | |

15 | plt.imshow(enlarged) |

16 | plt.show() |

The issue with enlarge_naive is that the same seam will be selected again and again, so this low energy seam will be the only to be duplicated.

Another way to get k different seams is to apply the process we used in function reduce, and keeping track of the seams we delete progressively.

The function find_seams(image, k) will find the top k seams for removal iteratively.

The inner workings of the function are a bit tricky so we’ve implemented it for you, but you should go into the code and understand how it works.

This should also help you for the implementation of enlarge.

作业已经帮忙实现了:查找k条不同的接缝,这样就可以根据k条接缝来轮流进行删除了。

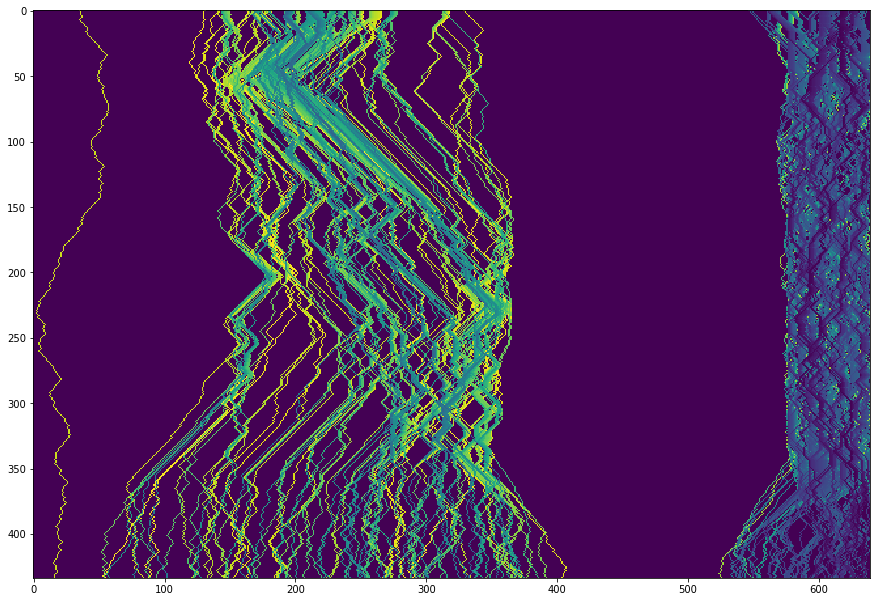

1 | from seam_carving import find_seams |

2 | |

3 | # Alternatively, find k seams for removal and duplicate them. |

4 | start = time() |

5 | seams = find_seams(img, W_new - W) |

6 | end = time() |

7 | |

8 | # Can take around 10 seconds |

9 | print("Finding %d seams: %f seconds." % (W_new - W, end - start)) |

10 | |

11 | plt.imshow(seams, cmap='viridis') |

12 | plt.show() |

不同的颜色是因为按照排序,给每条路线的标记的值不同。

Enlarge

We can see that all the seams found are different, and they avoid the castle and the person.

One issue we can mention is that we cannot enlarge more than we can reduce. Because of our process, the maximum enlargement is the width of the image W because we first need to find W different seams in the image.

One effect we can see on this image is that the blue sky at the right of the castle can only be enlarged x2. The concentration of seams in this area is very strong.

We can also note that the seams at the right of the castle have a blue color, which means they have low value and were removed in priority in the seam selection process.

值得注意的是,对于图像能放大的像素宽度肯定不会超过图像能缩减的像素宽度,毕竟最小能量线就只有那么多条。

实现enlarge函数。

1 | from seam_carving import enlarge |

2 | |

3 | # Let's first test with a small example |

4 | test_img = np.array([[0.0, 1.0, 3.0], |

5 | [0.0, 1.0, 3.0], |

6 | [0.0, 1.0, 3.0]]) |

7 | #test_img = np.arange(9, dtype=np.float64).reshape((3, 3)) |

8 | test_img = np.stack([test_img, test_img, test_img], axis=2) |

9 | assert test_img.shape == (3, 3, 3) |

10 | |

11 | # Increase image width |

12 | W_new = 5 |

13 | |

14 | out_naive = enlarge_naive(test_img, W_new) |

15 | out = enlarge(test_img, W_new) |

16 | |

17 | print("Original image (channel 0):") |

18 | print(test_img[:, :, 0]) |

19 | print("Enlarged naive image (channel 0): first seam is duplicated twice.") |

20 | print(out_naive[:, :, 0]) |

21 | print("Enlarged image (channel 0): first and second seam are each duplicated once.") |

22 | print(out[:, :, 0]) |

23 | |

24 | assert np.allclose(out[:, :, 0], np.array([[0, 0, 1, 1, 3], [0, 0, 1, 1, 3], [0, 0, 1, 1, 3]])) |

1 | W_new = 800 |

2 | |

3 | start = time() |

4 | out = enlarge(img, W_new) |

5 | end = time() |

6 | |

7 | # Can take around 20 seconds |

8 | print("Enlarging width from %d to %d: %f seconds." \ |

9 | % (W, W_new, end - start)) |

10 | |

11 | plt.subplot(2, 1, 1) |

12 | plt.title('Original') |

13 | plt.imshow(img) |

14 | |

15 | plt.subplot(2, 1, 2) |

16 | plt.title('Resized') |

17 | plt.imshow(out) |

18 | |

19 | plt.show() |

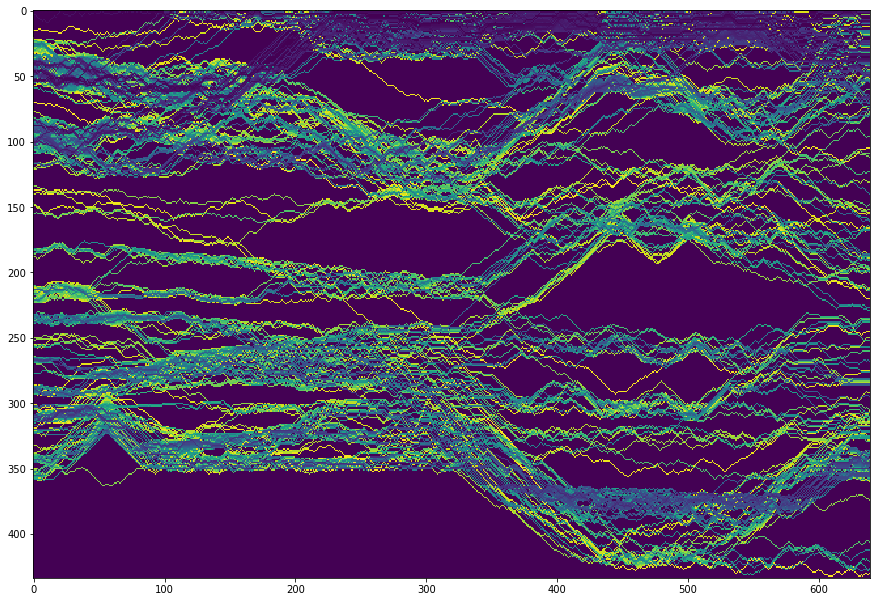

1 | # Map of the seams for horizontal seams. |

2 | start = time() |

3 | seams = find_seams(img, W_new - W, axis=0) |

4 | end = time() |

5 | |

6 | # Can take around 15 seconds |

7 | print("Finding %d seams: %f seconds." % (W_new - W, end - start)) |

8 | |

9 | plt.imshow(seams, cmap='viridis') |

10 | plt.show() |

1 | H_new = 600 |

2 | |

3 | start = time() |

4 | out = enlarge(img, H_new, axis=0) |

5 | end = time() |

6 | |

7 | # Can take around 20 seconds |

8 | print("Enlarging height from %d to %d: %f seconds." \ |

9 | % (H, H_new, end - start)) |

10 | |

11 | plt.subplot(1, 2, 1) |

12 | plt.title('Original') |

13 | plt.imshow(img) |

14 | |

15 | plt.subplot(1, 2, 2) |

16 | plt.title('Resized') |

17 | plt.imshow(out) |

18 | |

19 | plt.show() |

As you can see in the example above, the sky above the castle has doubled in size, the grass below has doubled in size but we still can’t reach a height of 600.

The algorithm then needs to enlarge the castle itself, while trying to avoid enlarging the windows for instance.

Other experiments on the image

Feel free to experiment more on this image, try different sizes to enlarge or reduce, or check what seams are chosen…

Reducing by a 2x factor often leads to weird patterns.

Enlarging by more than 2x is impossible since we only duplicate seams. One solution is to enlarge in mutliple steps (enlarge x1.4, enlarge again x1.4…)

放大两倍或者缩小二分之一图像会变得很奇怪。

这里提出一个方式就是进行多次Resize步骤,每次x1.4倍,x1.4倍这样的方式来放大,缩小同理。

1 | # Reduce image width |

2 | H, W, _ = img.shape |

3 | W_new = 200 |

4 | |

5 | start = time() |

6 | out = reduce(img, W_new) |

7 | end = time() |

8 | |

9 | print("Reducing width from %d to %d: %f seconds." % (W, W_new, end - start)) |

10 | |

11 | plt.subplot(2, 1, 1) |

12 | plt.title('Original') |

13 | plt.imshow(img) |

14 | |

15 | plt.subplot(2, 1, 2) |

16 | plt.title('Resized') |

17 | plt.imshow(out) |

18 | |

19 | plt.show() |

Faster reduce (extra credit 0.5%)

Implement a faster version of reduce called reduce_fast in the file seam_carving.py.

We will have a leaderboard on gradescope with the performance of students.

The autograder tests will check that the outputs match, and run the reduce_fast function on a set of images with varying shapes (say between 200 and 800).

怎么能实现更快地裁剪呢?

题目的提示是:do we really need to compute the whole cost map again at each iteration?

分析了一下reduce的过程,的确有一块是可以改进的。

1 | while out.shape[1] > size: |

2 | energy = efunc(out) |

3 | cost, paths = cfunc(out, energy) |

4 | end = np.argmin(cost[-1]) |

5 | seam = backtrack_seam(paths, end) |

6 | out = remove_seam(out, seam) |

就是计算energy能量图这一步,因为能量图的计算其实就是梯度的计算。

如果一个区域内的图像像素不变动的话,那这个区域内的梯度应该也不会放生改变,基于这一点,可以对计算能量图的步骤进行改进。

只需要对上一次optical seam最小能量线覆盖宽度内的区域进行梯度的重新更新,两侧区域的能量值不需要变动。

比如接缝最左边为left,则left-1处像素不变,梯度改变,left-2处及之前像素不变梯度不变。

比如接缝最右边为right,则right+1处像素不变,梯度改变,left+2处及之后像素不变梯度不变。

然后考虑特殊情况。

1 | from seam_carving import reduce_fast |

2 | |

3 | # Reduce image width |

4 | H, W, _ = img.shape |

5 | W_new = 400 |

6 | |

7 | start = time() |

8 | out = reduce(img, W_new) |

9 | end = time() |

10 | |

11 | print("Normal reduce width from %d to %d: %f seconds." % (W, W_new, end - start)) |

12 | |

13 | start = time() |

14 | out_fast = reduce_fast(img, W_new) |

15 | end = time() |

16 | |

17 | print("Faster reduce width from %d to %d: %f seconds." % (W, W_new, end - start)) |

18 | |

19 | assert np.allclose(out, out_fast), "Outputs don't match." |

20 | |

21 | |

22 | plt.subplot(3, 1, 1) |

23 | plt.title('Original') |

24 | plt.imshow(img) |

25 | |

26 | plt.subplot(3, 1, 2) |

27 | plt.title('Resized') |

28 | plt.imshow(out) |

29 | |

30 | plt.subplot(3, 1, 3) |

31 | plt.title('Faster resized') |

32 | plt.imshow(out) |

33 | |

34 | plt.show() |

Reducing and enlarging on another image

Also check these outputs with another image.

1 | # Load image |

2 | img2 = io.imread('imgs/wave.jpg') |

3 | img2 = util.img_as_float(img2) |

4 | |

5 | plt.title('Original Image') |

6 | plt.imshow(img2) |

7 | plt.show() |

1 | out = reduce(img2, 300) |

2 | plt.imshow(out) |

3 | plt.show() |

1 | out = enlarge(img2, 800) |

2 | plt.imshow(out) |

3 | plt.show() |

Forward Energy

Forward energy is a solution to some artifacts that appear when images have curves for instance.

Implement the function compute_forward_cost. This function will replace the compute_cost we have been using until now.

基本的seam carving 会影响物体的原有结构,比如曲线啊。

因此提出了Forward Energy这个方法。详见 CS131 Lecture8 的PPT P76-P86。

介绍上说的是 Instead of removing the seam of least energy, remove the seam that inserts the least energy to the image !

之前的做法是去除掉具有最小能量的接缝,而Forward Energy的做法是去除掉给整张图像带来最小能量的接缝。

具体的可视化解释在PPT中,这里就放公式了。

之前的能量图定义为

Forward能量图的定义为

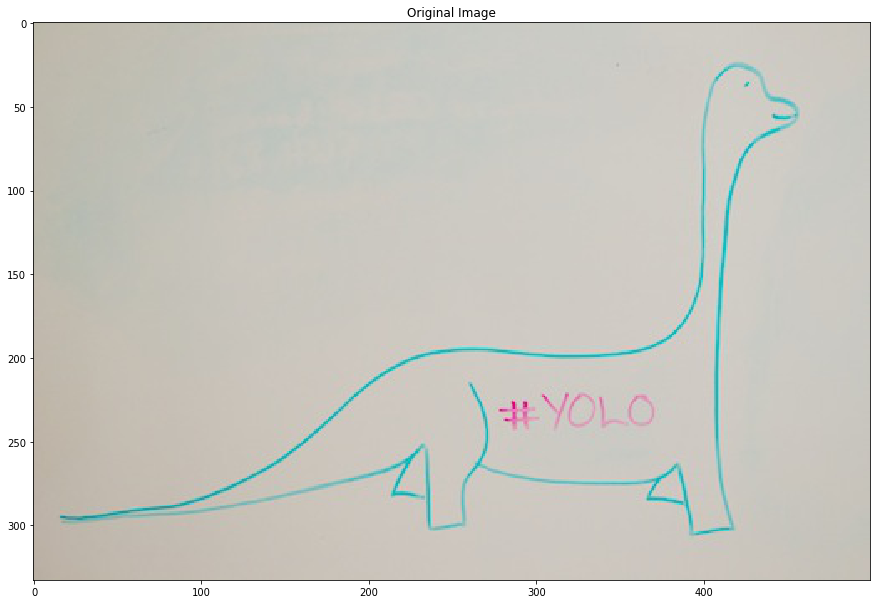

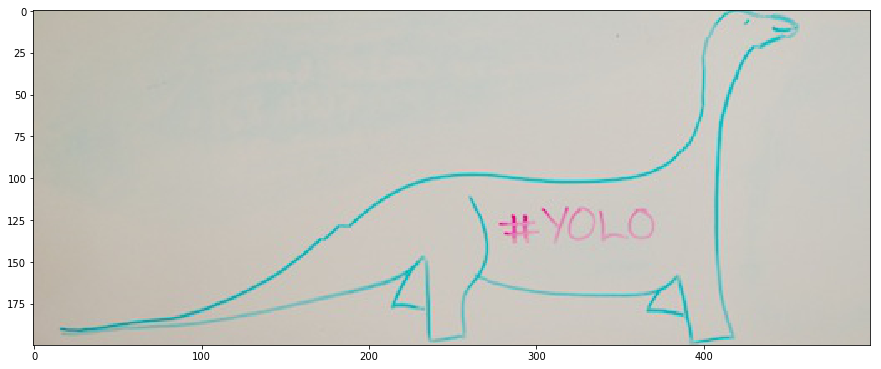

1 | # Load image |

2 | img_yolo = io.imread('imgs/yolo.jpg') |

3 | img_yolo = util.img_as_float(img_yolo) |

4 | |

5 | plt.title('Original Image') |

6 | plt.imshow(img_yolo) |

7 | plt.show() |

1 | from seam_carving import compute_forward_cost |

2 | |

3 | # Let's first test with a small example |

4 | img_test = np.array([[1.0, 1.0, 2.0], |

5 | [0.5, 0.0, 0.0], |

6 | [1.0, 0.5, 2.0]]) |

7 | img_test = np.stack([img_test]*3, axis=2) |

8 | assert img_test.shape == (3, 3, 3) |

9 | |

10 | energy = energy_function(img_test) |

11 | |

12 | solution_cost = np.array([[0.5, 2.5, 3.0], |

13 | [1.0, 2.0, 3.0], |

14 | [2.0, 4.0, 6.0]]) |

15 | |

16 | solution_paths = np.array([[ 0, 0, 0], |

17 | [ 0, -1, 0], |

18 | [ 0, -1, -1]]) |

19 | |

20 | # Vertical Cost Map |

21 | vcost, vpaths = compute_forward_cost(img_test, energy) # don't need the first argument for compute_cost |

22 | |

23 | print("Image:") |

24 | print(color.rgb2grey(img_test)) |

25 | |

26 | print("Energy:") |

27 | print(energy) |

28 | |

29 | print("Cost:") |

30 | print(vcost) |

31 | print("Solution cost:") |

32 | print(solution_cost) |

33 | |

34 | print("Paths:") |

35 | print(vpaths) |

36 | print("Solution paths:") |

37 | print(solution_paths) |

38 | |

39 | assert np.allclose(solution_cost, vcost) |

40 | assert np.allclose(solution_paths, vpaths) |

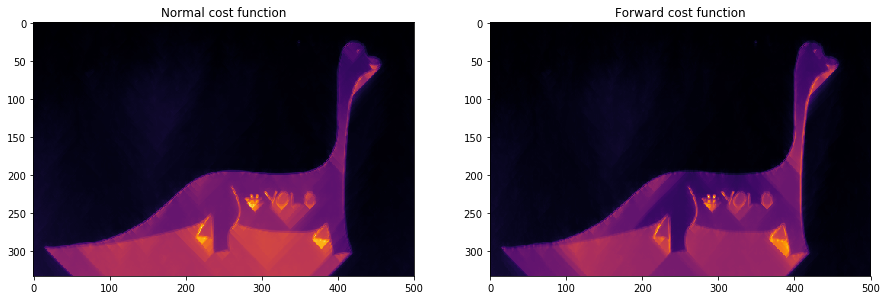

1 | from seam_carving import compute_forward_cost |

2 | |

3 | energy = energy_function(img_yolo) |

4 | |

5 | out, _ = compute_cost(img_yolo, energy) |

6 | plt.subplot(1, 2, 1) |

7 | plt.imshow(out, cmap='inferno') |

8 | plt.title("Normal cost function") |

9 | |

10 | out, _ = compute_forward_cost(img_yolo, energy) |

11 | plt.subplot(1, 2, 2) |

12 | plt.imshow(out, cmap='inferno') |

13 | plt.title("Forward cost function") |

14 | |

15 | plt.show() |

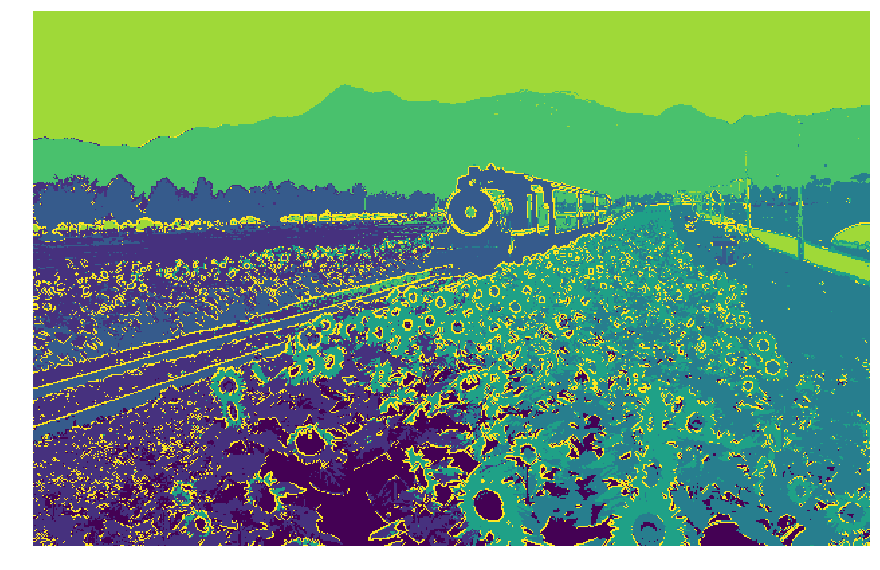

We observe that the forward energy insists more on the curved edges of the image.

可以看到,Forward Energy方法在保留边缘曲线的结构上比原方法好。

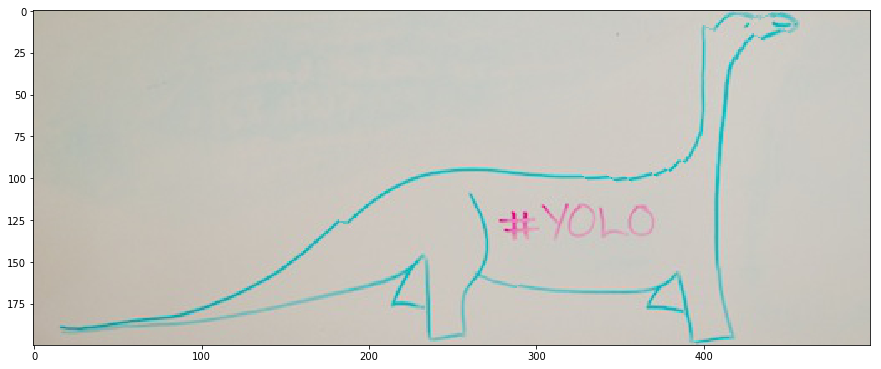

1 | from seam_carving import reduce |

2 | |

3 | start = time() |

4 | out = reduce(img_yolo, 200, axis=0) |

5 | end = time() |

6 | print("Old Solution: %f seconds." % (end - start)) |

7 | |

8 | plt.imshow(out) |

9 | plt.show() |

The issue with our standard reduce function is that it removes vertical seams without any concern for the energy introduced in the image.

In the case of the dinosaur above, the continuity of the shape is broken. The head is totally wrong for instance, and the back of the dinosaure lacks continuity.

Forward energy will solve this issue by explicitely putting high energy on a seam that breaks this continuity and introduces energy.

1 | # This step can take a very long time depending on your implementation. |

2 | start = time() |

3 | out = reduce(img_yolo, 200, axis=0, cfunc=compute_forward_cost) |

4 | end = time() |

5 | print("Forward Energy Solution: %f seconds." % (end - start)) |

6 | |

7 | plt.imshow(out) |

8 | plt.show() |

Object removal (extra credit 0.5%)

Object removal uses a binary mask of the object to be removed.

Using the reduce and enlarge functions you wrote before, complete the function remove_object to output an image of the same shape but without the object to remove.

移除目标和保留目标

移除目标的思路应该很清晰,就是将图像中目标处的像素视为能量最小或最大,即可将目标去除了。

两个问题:

- 如何进行标记,从而每次删除能量线的时候,都能删到并且尽可能多的删到带有标记的能量线?同时坐标下标不会混乱。

- 如何在删除目标之后保持和原图同样的尺寸

解决方式:

- 判断最小能量线的时候,将mask区域乘上负数,自然找到的接缝能量会最小,并且穿过mask区域的像素点会更多。

- 做裁剪的时候,将mask根据接缝和图像一起进行裁剪,自然不会引起下标混乱。

- 删除目标之后再调用之前的enlarge函数,进行图像的放大,放大到原图尺寸。

1 | # Load image |

2 | image = io.imread('imgs/wyeth.jpg') |

3 | image = util.img_as_float(image) |

4 | |

5 | mask = io.imread('imgs/wyeth_mask.jpg', as_grey=True) |

6 | mask = util.img_as_bool(mask) |

7 | |

8 | plt.subplot(1, 2, 1) |

9 | plt.title('Original Image') |

10 | plt.imshow(image) |

11 | |

12 | plt.subplot(1, 2, 2) |

13 | plt.title('Mask of the object to remove') |

14 | plt.imshow(mask) |

15 | |

16 | plt.show() |

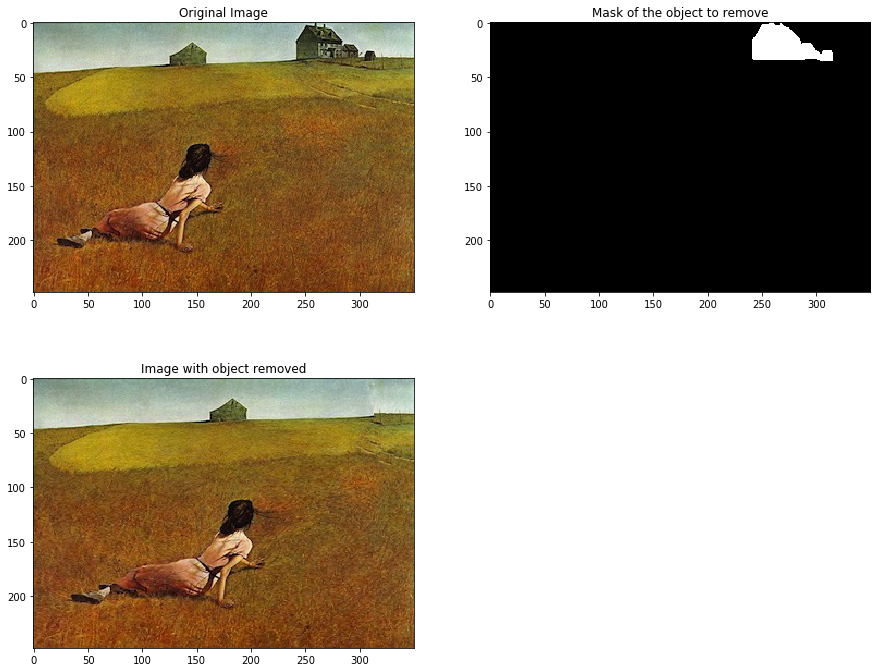

1 | # Use your function to remove the object |

2 | from seam_carving import remove_object |

3 | out = remove_object(image, mask) |

4 | |

5 | plt.subplot(2, 2, 1) |

6 | plt.title('Original Image') |

7 | plt.imshow(image) |

8 | |

9 | plt.subplot(2, 2, 2) |

10 | plt.title('Mask of the object to remove') |

11 | plt.imshow(mask) |

12 | |

13 | plt.subplot(2, 2, 3) |

14 | plt.title('Image with object removed') |

15 | plt.imshow(out) |

16 | |

17 | plt.show() |

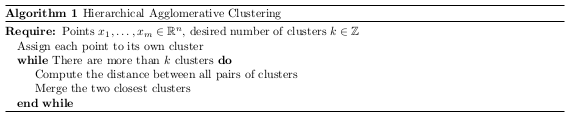

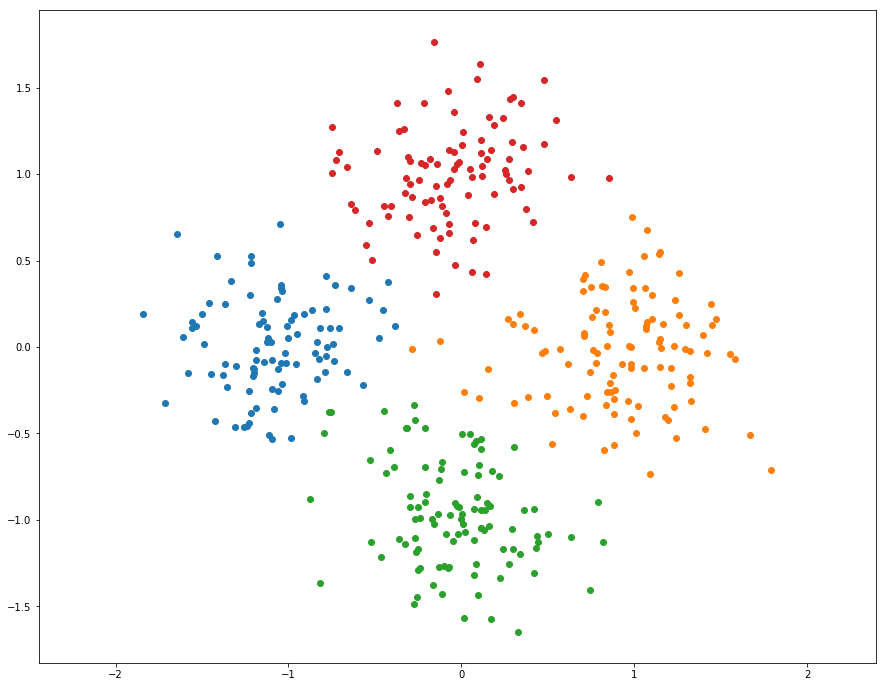

Segmentation-Clustering

实现聚类算法:K-Means和HAC(凝聚聚类算法);

构建图像的特征序列,进行聚类分割;

实现分割结果与GroundTruth的量化评估,根据指标评估聚类算法。

This notebook includes both coding and written questions. Please hand in this notebook file with all the outputs and your answers to the written questions.

This assignment covers K-Means and HAC methods for clustering.

这份作业包含的主题:用于聚类的K-means 和 HAC(层次凝聚聚类)方法,以及聚类算法的量化评估。

Introduction

In this assignment, you will use clustering algorithms to segment images. You will then use these segmentations to identify foreground and background objects.

Your assignment will involve the following subtasks:

- Clustering algorithms: Implement K-Means clustering and Hierarchical Agglomerative Clustering.

- Pixel-level features: Implement a feature vector that combines color and position information and implement feature normalization.

- Quantitative Evaluation: Evaluate segmentation algorithms with a variety of parameter settings by comparing your computed segmentations against a dataset of ground-truth segmentations.

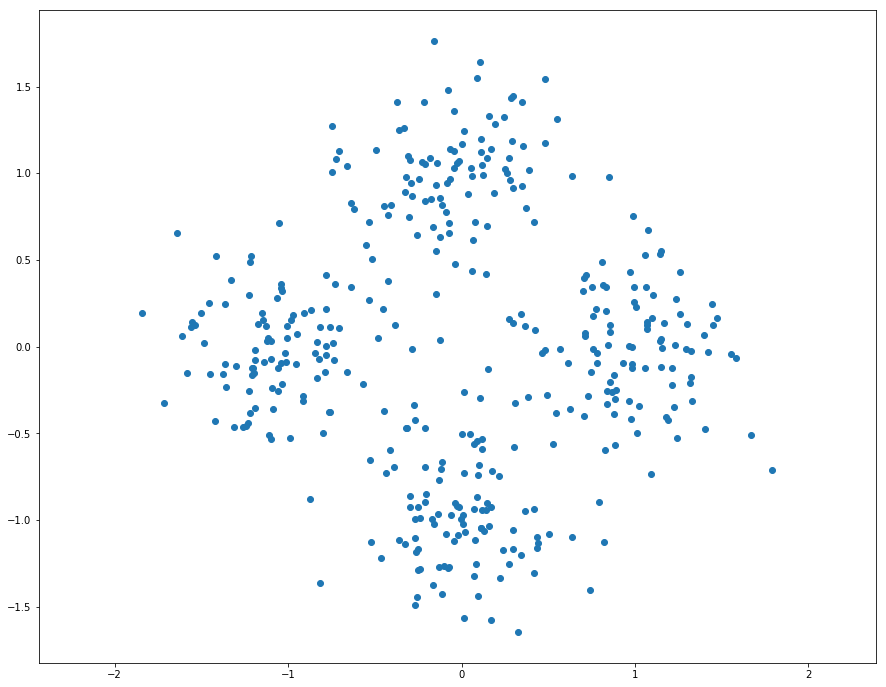

Clustering Algorithms

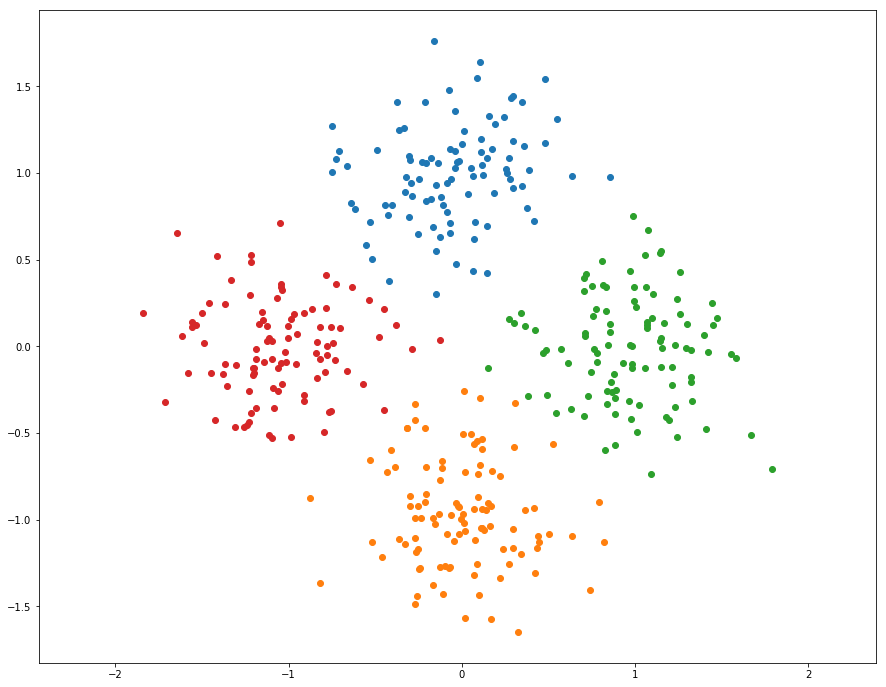

1 | # Generate random data points for clustering |

2 | |

3 | # Cluster 1 |

4 | mean1 = [-1, 0] |

5 | cov1 = [[0.1, 0], [0, 0.1]] |

6 | X1 = np.random.multivariate_normal(mean1, cov1, 100) |

7 | |

8 | # Cluster 2 |

9 | mean2 = [0, 1] |

10 | cov2 = [[0.1, 0], [0, 0.1]] |

11 | X2 = np.random.multivariate_normal(mean2, cov2, 100) |

12 | |

13 | # Cluster 3 |

14 | mean3 = [1, 0] |

15 | cov3 = [[0.1, 0], [0, 0.1]] |

16 | X3 = np.random.multivariate_normal(mean3, cov3, 100) |

17 | |

18 | # Cluster 4 |

19 | mean4 = [0, -1] |

20 | cov4 = [[0.1, 0], [0, 0.1]] |

21 | X4 = np.random.multivariate_normal(mean4, cov4, 100) |

22 | |

23 | # Merge two sets of data points |

24 | X = np.concatenate((X1, X2, X3, X4)) |

25 | |

26 | # Plot data points |

27 | plt.scatter(X[:, 0], X[:, 1]) |

28 | plt.axis('equal') |

29 | plt.show() |

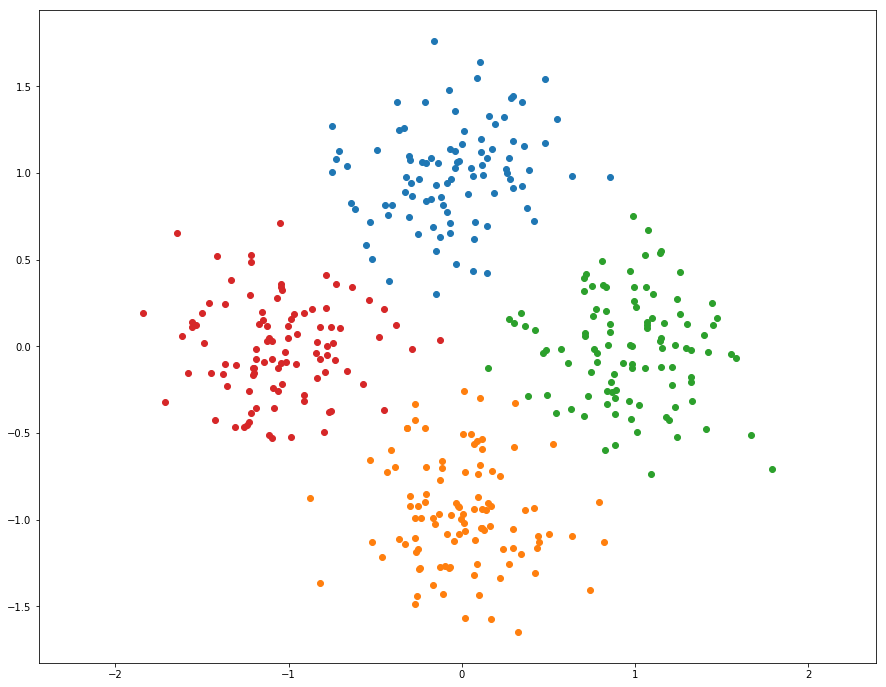

K-Means Clustering

As discussed in class, K-Means is one of the most popular clustering algorithms. We have provided skeleton code for K-Means clustering in the file segmentation.py. Your first task is to finish implementing kmeans in segmentation.py. This version uses nested for loops to assign points to the closest centroid and compute new mean of each cluster.

目前题图允许使用循环实现点到最近中心的分配即可。

K-means的步骤:

- 随机初始化聚类中心

- 将点分配给最近的中心

- 计算每个聚类的新的中心

- 如果聚类的分配不再变动则停止

- 从第二步继续循环

1 | from segmentation import kmeans |

2 | |

3 | np.random.seed(0) |