introduction

keywords

-

supervised learning

Supervised learning, in the context of artificial intelligence (AI) and machine learning, is a type of system in which both input and desired output data are provided. Input and output data are labelled for classification to provide a learning basis for future data processing. -

unsupervised learning

opposite of supervised learning -

classification

Cases such as the digit recognition example, in which the aim is to assign each input vector to one of a finite number of discrete categories -

reinforcement learning

Reinforcement learning is where a system, or agent, tries to maximize some measure of reward while interacting with a dynamic environment. If an action is followed by an increase in the reward, then the system increases the tendency to produce that action. -

regression

If the desired output consists of one or more continuous variables, then the task is called regression

-

error function

-

over-fitting

-

generalization

-

credit assignment

-

regularization

-

maximum likelihood

-

Polynomial Curve Fitting

-

shrinkage methods

-

ridge regression

-

weight decay

-

Minkowski loss

-

differential entropy

In other pattern recognition problems, the training data consists of a set of input vectors x without any corresponding target values. The goal in such unsupervised learning problems may be to discover groups of similar examples within the data, where it is called clustering, or to determine the distribution of data within the input space, known as density estimation, or to project the data from a high-dimensional space down to two or three dimensions for the purpose of visualization.

Polynomial Curve Fitting

suppose that we are given a training set comprising N observations of x, written

together with corresponding observations of the values of t ,denoted

we shall fit the data using a polynomial function of the form

where n is the order of the polynomial, and denotes x raised to the power of j.

The polynomial coefficients , … , are collectively denoted by the vector w.

Note that, although the polynomial function is a nonlinear function of x, it is a linear function of the coefficients w.

The values of the coefficients will be determined by fitting the polynomial to the training data. This can be done by minimizing an error function that measures the misfit between the function , for any given value of w, and the training set data points.

One simple choice of error function, which is widely used, is given by the sum of the squares of the errors between the predictions for each data point and the corresponding target values , so that we minimize

where the factor of 1/2 is included for later convenience. We can solve the curve fitting problem by choosing the value of w for which E(w) is as small as possible.

It is sometimes more convenient to use the root-mean-square

in which the division by N allows us to compare different sizes of data sets on an equal footing, and the square root ensures that is measured on the same scale (and in the same units) as the target variable t.

there is something rather unsatisfying about having to limit the number of parameters in a model according to the size of the available training set. It would seem more reasonable to choose the complexity of the model according to the complexity of the problem being solved. We shall see that the least squares approach to finding the model parameters represents a specific case of maximum likelihood, and that the over-fitting problem can be understood as a general property of maximum likelihood. By adopting a Bayesian approach, the over-fitting problem can be avoided. We shall see that there is no difficulty from a Bayesian perspective in employing models for which the number of parameters greatly exceeds the number of data points. Indeed, in a Bayesian model the effective number of parameters adapts automatically to the size of the data set

For the moment, however, it is instructive to continue with the current approach and to consider how in practice we can apply it to data sets of limited size where we may wish to use relatively complex and flexible models. One technique that is often used to control the over-fitting phenomenon in such cases is that of regularization, which involves adding a penalty term to the error function (1.1.2) in order to discourage the coefficients from reaching large values. The simplest such penalty term takes the form of a sum of squares of all of the coefficients, leading to a modified error function of the form

where , and the coefficient λ governs the relative importance of the regularization term compared with the sum-of-squares error term.

Note that often the coefficient w0 is omitted from the regularizer because its inclusion causes the results to depend on the choice of origin for the target variable (Hastie et al., 2001), or it may be included but with its own regularization coefficient.

Again, the error function in (1.1.4) can be minimized exactly in closed form. Techniques such as this are known in the statistics literature as shrinkage methods because they reduce the value of the coefficients. The particular case of a quadratic regularizer is called ridge regres- sion (Hoerl and Kennard, 1970). In the context of neural networks, this approach is known as weight decay.

Probability Theory

Probability densities

A key concept in the field of pattern recognition is that of uncertainty. It arises both through noise on measurements, as well as through the finite size of data sets. Prob- ability theory provides a consistent framework for the quantification and manipula- tion of uncertainty and forms one of the central foundations for pattern recognition. Combined with decision theory, it allows us to make optimal predictions given all the information available to us, even though that information may be incomplete or ambiguous.

The probability that X will take the value and Y will take the value is written and is called the joint probability of X = and . It is given by the number of points falling in the cell i,j as a fraction of the total number of points, and hence

Here we are implicitly considering the limit . Similarly, the probability that X takes the value irrespective of the value of Y is written as and is given by the fraction of the total number of points that fall in column i, so that

Because the number of instances in column i is just the sum of the number of instances in each cell of that column, we have nij and therefore, from (1.2.1) and (1.2.2), we have

which is the sum rule of probability. Note that is sometimes called the marginal probability, because it is obtained by marginalizing, or summing out, the other variables (in this case Y ).

If we consider only those instances for which , then the fraction of such instances for which is written and is called the conditional probability of given . It is obtained by finding the fraction of those points in column i that fall in cell i,j and hence is given by

From (1.2.1), (1.2.2), (1.2.4) we can then derive the following relationship

which is the product rule of probability.

two fundamental rules of probability theory in the following form.

- sum rule

- product rule

From the product rule, together with the symmetry property , we immediately obtain the following relationship between conditional probabilities

which is called Bayes’ theorem and which plays a central role in pattern recognition and machine learning.

Using the sum rule, the denominator in Bayes’ theorem can be expressed in terms of the quantities appearing in the numerator

We can view the denominator in Bayes’ theorem as being the normalization constant required to ensure that the sum of the conditional probability on the left-hand side of (1.2.8) over all values of Y equals one.

Probability densities

As well as considering probabilities defined over discrete sets of events, we also wish to consider probabilities with respect to continuous variables. If the probability of a real-valued variable x falling in the interval is given by for , then is called the probability density over x. The probability that x will lie in an interval is then given by

Because probabilities are nonnegative, and because the value of x must lie somewhere on the real axis, the probability density p(x) must satisfy the two conditions

- probabilities are nonnegativ

- integrad of probability density is 1

Under a nonlinear change of variable, a probability density transforms differently from a simple function, due to the Jacobian factor. For instance, if we consider a change of variables , then a function becomes . Now consider a probability density that corresponds to a density with respect to the new variable y, where the suffices denote the fact that and are different densities. Observations falling in the range will, for small values of , be transformed into the range where , and hence

One consequence of this property is that the concept of the maximum of a probability density is dependent on the choice of variable.

The probability that x lies in the interval is given by the cumulative distribution function(CDF) defined by

which satisfies

If we have several continuous variables , denoted collectively by the vector x, then we can define a joint probability density such that the probability of x falling in an infinitesimal volume containing the point x is given by . This multivariate probability density must satisfy and in which the integral is taken over the whole of x space. We can also consider joint probability distributions over a combination of discrete and continuous variables.

Note that if x is a discrete variable, then is sometimes called a probability mass function because it can be regarded as a set of ‘probability masses’ concentrated at the allowed values of x.

The sum and product rules of probability, as well as Bayes’ theorem, apply equally to the case of probability densities, or to combinations of discrete and continuous variables. For instance, if x and y are two real variables, then the sum and product rules take the form

- sum rule

- product rule

Expectations and covariances

One of the most important operations involving probabilities is that of finding weighted averages of functions. The average value of some function under a probability distribution is called the expectation of and will be denoted by .

- For a discrete distribution, the average is weighted by the relative probabilities of the different values of x

- For continuous variables, expectations are expressed in terms of an integration with respect to the corresponding probability density

In either case, if we are given a finite number N of points drawn from the probability distribution or probability density, then the expectation can be approximated as a finite sum over these points

Sometimes we will be considering expectations of functions of several variables, in which case we can use a subscript to indicate which variable is being averaged over, so that for instance denotes the average of the function with respect to the distribution of x. Note that will be a function of y.

We can also consider a conditional expectation with respect to a conditional distribution, so that

The variance of f(x) is defined by

Expanding out the square, we see that the variance can also be written in terms of the expectations of and

for variabce of one variable x

for random variables x and y, the covariance is defined by

for two vectors of random variables and , the covariance is a matrix

Bayesian probabilities

So far, we have viewed probabilities in terms of the frequencies of random, repeatable events. We shall refer to this as the classical or frequentist interpretation of probability. Now we turn to the more general Bayesian view, in which probabilities provide a quantification of uncertainty.

we can adopt a similar approach when making inferences about quantities such as the parameters in the polynomial curve fitting example. We capture our assumptions about , before observing the data, in the form of a prior probability distribution . The effect of the observed data is expressed through the conditional probability , how this can be represented explicitly. Bayes’ theorem, which takes the form

then allows us to evaluate the uncertainty in after we have observed D in the form of the posterior probability

The quantity on the right-hand side of Bayes’ theorem is evaluated for the observed data set and can be viewed as a function of the parameter vector , in which case it is called the likelihood function.It expresses how probable the observed data set is for different settings of the parameter vector .

Note that the likelihood is not a probability distribution over , and its integral with respect to does not (necessarily) equal one.

Given this definition of likelihood, we can state Bayes’ theorem in words: where all of these quantities are viewed as functions of .

The denominator in (1.2.23) is the normalization constant, which ensures that the posterior distribution on the left-hand side is a valid probability density and integrates to one. Indeed, integrating both sides of (1.2.23) with respect to , we can express the denominator in Bayes’ theorem in terms of the prior distribution and the likelihood function

In both the Bayesian and frequentist paradigms, the likelihood function plays a central role. However, the manner in which it is used is fundamentally different in the two approaches. In a frequentist setting, is considered to be a fixed parameter, whose value is determined by some form of ‘estimator’, and error bars on this estimate are obtained by considering the distribution of possible data sets . By contrast, from the Bayesian viewpoint there is only a single data set (namely the one that is actually observed), and the uncertainty in the parameters is expressed through a probability distribution over .

A widely used frequentist estimator is maximum likelihood, in which is set to the value that maximizes the likelihood function . This corresponds to choosing the value of for which the probability of the observed data set is maximized. In the machine learning literature, the negative log of the likelihood function is called an error function. Because the negative logarithm is a monotonically decreasing function, maximizing the likelihood is equivalent to minimizing the error.

The Gaussian distribution

For the case of a single real-valued variable x, the Gaussian distribution is defined by

which is governed by two parameters: , called the mean, and , called the variance. The square root of the variance, given by , is called the standard deviation, and the reciprocal of the variance, written as , is called the precision.

From the form of (1.2.25) we see that the Gaussian distribution satisfies . Also it is straightforward to show that the Gaussian is normalized, so that

Proof the form (1.2.26-1.2.29) with standard gaussian distribution(), the common gaussian distribution can be linear transform of the standard one.

gaussian distribution

standard gaussian distribution

let then

let

so

where

then

then

then

image that

then

clearly

as the normal gaussion distribution satisfiestranslate rule:

scale rule:

so the integral of any gaussion distribution density function equals 1

mean of guassion distribution:

gaussian distribution function defined

where and are two parameters, with . By definition of the mean we have

let

let

clearly that, let then the is a symmetric way of that

so and

let

as proofed, the integral of any gaussion distribution density function equals 1,

variance of guassion distribution:

with the same tricks (let ) as before we have

let

thenas so

then , integrad both side

thenas Hopital Rule:

then

then

let

then

let

so

then

then

theorem for gaussion density function

We are also interested in the Gaussian distribution defined over a D-dimensional vector x of continuous variables, which is given by

where the D-dimensional vector is called the mean, the D × D matrix is called the covariance, and denotes the determinant of .

Now suppose that we have a data set of observations , rep- resenting N observations of the scalar variable . Note that we are using the typeface to distinguish this from a single observation of the vector-valued variable , which we denote by . We shall suppose that the observations are drawn independently from a Gaussian distribution whose mean and variance are unknown, and we would like to determine these parameters from the data set. Data points that are drawn independently from the same distribution are said to be independent and identically distributed, which is often abbreviated to i.i.d. We have seen that the joint probability of two independent events is given by the product of the marginal probabilities for each event separately. Because our data set is i.i.d., we can therefore write the probability of the data set, given and , in the form

With the dataset sample m times randomly, get the m group data, denoted

then the likelihood function is

One common criterion for determining the parameters in a probability distribu- tion using an observed data set is to find the parameter values that maximize the likelihood function. This might seem like a strange criterion because, from our fore- going discussion of probability theory, it would seem more natural to maximize the probability of the parameters given the data, not the probability of the data given the parameters.In fact, these two criteria are related, as we shall discuss in the context of curve fitting.

For the moment, however, we shall determine values for the unknown parameters and in the Gaussian by maximizing the likelihood function (1.2.31). In practice, it is more convenient to maximize the log of the likelihood function. Because the logarithm is a monotonically increasing function of its argument, maximization of the log of a function is equivalent to maximization of the function itself. Taking the log not only simplifies the subsequent mathematical analysis, but it also helps numerically because the product of a large number of small probabilities can easily underflow the numerical precision of the computer, and this is resolved by computing instead the sum of the log probabilities.From (1.2.25) and (1.2.31), the log likelihood function can be written in the form

Maximizing (1.2.32) with respect to , that , we obtain the maximum likelihood solution given by

which is the sample mean, i.e., the mean of the observed values . Similarly, maximizing (1.2.32) with respect to , that we obtain the maximum likelihood solution for the variance in the form

which is the sample variance measured with respect to the sample mean

Note that we are performing a joint maximization of (1.2.32) with respect to and , but in the case of the Gaussian distribution the solution for decouples from that for so that we can first evaluate (1.2.33) and then subsequently use this result to evaluate (1.2.34).

We first note that the maximum likelihood solutions and are functions of the data set values . Consider the expectations of these quantities with respect to the data set values, which themselves come from a Gaussian distribution and . It is straightforward to show that

so that on average the maximum likelihood estimate will obtain the correct mean but will underestimate the true variance by a factor .

The intuition behind this result is that aeraged across the N data sets, the mean is correct, but the variance is systematically under-estimated because it is measured relative to the sample mean and not relative to the true mean.

From (1.2.36) it follows that the following estimate for the variance parameter is unbiased

Note that the bias of the maximum likelihood solution becomes less significant as the number N of data points increases, and in the limit the maximum likelihood solution for the variance equals the true variance of the distribution that generated the data. In practice, for anything other than small N, this bias will not prove to be a serious problem. However, throughout this book we shall be interested in more complex models with many parameters, for which the bias problems associated with maximum likelihood will be much more severe. In fact, as we shall see, the issue of bias in maximum likelihood lies at the root of the over-fitting problem that we encountered earlier in the context of polynomial curve fitting.

Prooved of the formula (1.2.37)let sample meal , sample variance , population mean , populatioin variance

consider variance definition without bias

clearly, when devide n, , furthermore

so when consider the bias

Curve fitting re-visited

We have seen how the problem of polynomial curve fitting can be expressed in terms of error minimization. Here we return to the curve fitting example and view it from a probabilistic perspective, thereby gaining some insights into error functions and regularization, as well as taking us towards a full Bayesian treatment.

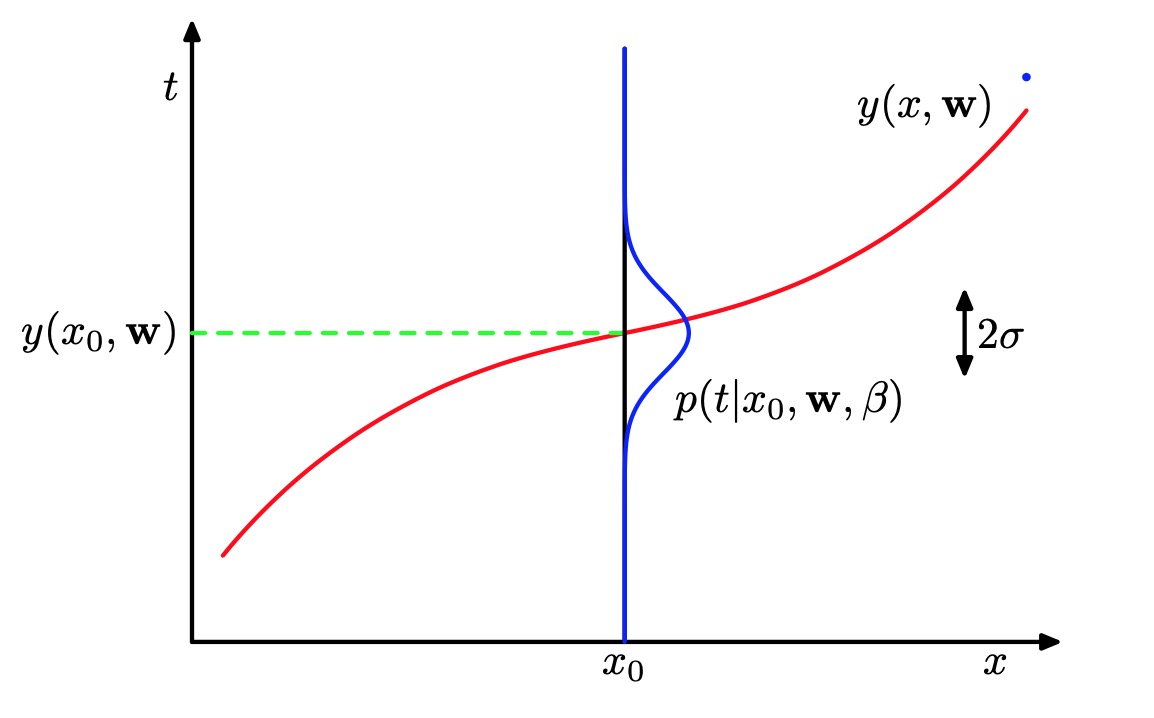

The goal in the curve fitting problem is to be able to make predictions for the target variable t given some new value of the input variable x on the basis of a set of training data comprising N input values and their corresponding target values . We can express our uncertainty over the value of the target variable using a probability distribution. For this purpose, we shall assume that, given the value of , the corresponding value of has a Gaussian distribution with a mean equal to the value of the polynomial curve given Thus we have

where we have defined a precision parameter corresponding to the inverse variance of the distribution.

Schematic illustration of a Gaussian conditional distribution for t given x given by (1.2.25), in which the mean is given by the polynomial function , and the precision is given by the parameter , which is related to the variance by

We now use the training data to determine the values of the unknown parameters and by maximum likelihood. If the data are assumed to be drawn independently from the distribution (1.2.25), then the likelihood function is given by

Substituting for the form of the Gaussian distribution, given by (1.2.25), we obtain the log likelihood function in the form

Consider first the determination of the maximum likelihood solution for the polynomial coefficients, which will be denoted by . These are determined by maximizing (1.2.40) with respect to . For this purpose, we can omit the last two terms on the right-hand side of (1.2.40) because they do not depend on . Also, we note that scaling the log likelihood by a positive constant coefficient does not alter the location of the maximum with respect to , and so we can replace the coefficient β/2 with 1/2. Finally, instead of maximizing the log likelihood, we can equivalently minimize the negative log likelihood. We therefore see that maximizing likelihood is equivalent, so far as determining is concerned, to minimizing the sum-of-squares error function defined by (1.1.2). Thus the sum-of-squares error function has arisen as a consequence of maximizing likelihood under the assumption of a Gaussian noise distribution.

We can also use maximum likelihood to determine the precision parameter β of the Gaussian conditional distribution. Maximizing (1.2.40) with respect to β gives

Again we can first determine the parameter vector governing the mean and subsequently use this to find the precision as was the case for the simple Gaussian distribution.

Having determined the parameters and , we can now make predictions for new values of . Because we now have a probabilistic model, these are expressed in terms of the predictive distribution that gives the probability distribution over t, rather than simply a point estimate, and is obtained by substituting the maximum likelihood parameters into (1.2.38) to give

Now let us take a step towards a more Bayesian approach and introduce a prior distribution over the polynomial coefficients . For simplicity, let us consider a Gaussian distribution of the form

where is the precision of the distribution, and M +1 is the total number of elements in the vector for an order polynomial. Variables such as , which control the distribution of model parameters, are called hyperparameters. Using Bayes’ theorem, the posterior distribution for is proportional to the product of the prior distribution and the likelihood function

We can now determine by finding the most probable value of given the data, in other words by maximizing the posterior distribution. This technique is called maximum posterior, or simply MAP. Taking the negative logarithm of (1.2.44) and combining with (1.2.40) and (1.2.43), we find that the maximum of the posterior is given by the minimum of

Thus we see that maximizing the posterior distribution is equivalent to minimizing the regularized sum-of-squares error function encountered earlier in the form (1.1.4), with a regularization parameter given by .

Bayesian curve fitting

Although we have included a prior distribution , we are so far still making a point estimate of and so this does not yet amount to a Bayesian treatment. In a fully Bayesian approach, we should consistently apply the sum and product rules of probability, which requires, as we shall see shortly, that we integrate over all values of . Such marginalizations lie at the heart of Bayesian methods for pattern recognition.

In the curve fitting problem, we are given the training data and , along with a new test point , and our goal is to predict the value of . We therefore wish to evaluate the predictive distribution . Here we shall assume that the parameters and are fixed and known in advance (in later chapters we shall discuss how such parameters can be inferred from data in a Bayesian setting).

A Bayesian treatment simply corresponds to a consistent application of the sum and product rules of probability, which allow the predictive distribution to be written in the form

Here is given by (1.2.38), and we have omitted the dependence on and to simplify the notation.Here is the posterior distribution over param- eters, and can be found by normalizing the right-hand side of (1.2.44). We shall see that for problems such as the curve-fitting example, this posterior distribution is a Gaussian and can be evaluated analytically. Similarly, the integration in (1.2.46) can also be performed analytically with the result that the predictive distribution is given by a Gaussian of the form

where the mean and variance are given by

mean:

variance:

here the matrix S is given by

where is the unit matrix, and we have defined the vector with elements for .

We see that the variance, as well as the mean, of the predictive distribution in (1.2.46) is dependent on .

Model Selectioin

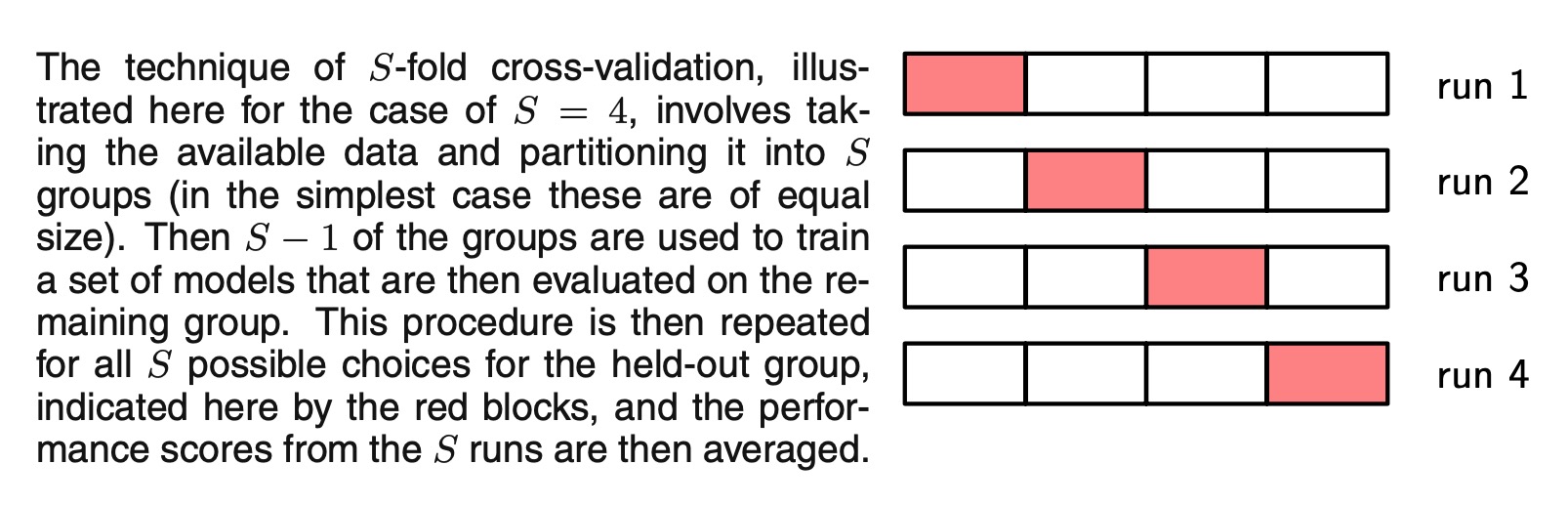

In our example of polynomial curve fitting using least squares, we saw that there was an optimal order of polynomial that gave the best generalization. The order of the polynomial controls the number of free parameters in the model and thereby governs the model complexity. With regularized least squares, the regularization coefficient also controls the effective complexity of the model, whereas for more complex models, such as mixture distributions or neural networks there may be multiple pa- rameters governing complexity. In a practical application, we need to determine the values of such parameters, and the principal objective in doing so is usually to achieve the best predictive performance on new data. Furthermore, as well as find- ing the appropriate values for complexity parameters within a given model, we may wish to consider a range of different types of model in order to find the best one for our particular application.

In many applications, however, the supply of data for training and testing will be limited, and in order to build good models, we wish to use as much of the available data as possible for training. However, if the validation set is small, it will give a relatively noisy estimate of predictive performance. One solution to this dilemma is to use cross-validation, which is illustrated as follow. This allows a proportion (S − 1)/S of the available data to be used for training while making use of all of the data to assess performance.When data is particularly scarce, it may be appropriate to consider the case S = N , where N is the total number of data points, which gives the leave-one-out technique.

The Curse of Dimensionality

In the polynomial curve fitting example we had just one input variable . For practical applications of pattern recognition, however, we will have to deal with spaces of high dimensionality comprising many input variables. As we now discuss, this poses some serious challenges and is an important factor influencing the design of pattern recognition techniques.

Decision Thory

We have seen that how probability theory provides us with a consistent mathematical framework for quantifying and manipulating uncertainty. Here we turn to a discussion of decision theory that, when combined with probability theory, allows us to make optimal decisions in situations involving uncertainty such as those encountered in pattern recognition.

Suppose we have an input vector together with a corresponding vector of target variables, and our goal is to predict given a new value for . For regression problems, will comprise continuous variables, whereas for classification problems it will represent class labels.The joint probability distribution provides a complete summary of the uncertainty associated with these variables.

Minimizing the misclassification rate

Suppose that our goal is simply to make as few misclassifications as possible. We need a rule that assigns each value of to one of the available classes. Such a rule will divide the input space into regions called decision regions, one for each class, such that all points in are assigned to class . The boundaries between decision regions are called decision boundaries or decision surfaces. Note that each decision region need not be contiguous but could comprise some number of disjoint regions.

For the more general case of K classes, it is slightly easier to maximize the probability of being correct, which is given by

which is maximized when the regions are chosen such that each is assigned to the class for which is largest.Again, using the product rule , and noting that the factor of is common to all terms, we see that each should be assigned to the class having the largest posterior probability .

Minimizing the expected loss

For many applications, our objective will be more complex than simply mini- mizing the number of misclassifications.

We can formalize classifier through the introduction of a loss function, also called a cost function, which is a single, overall measure of loss incurred in taking any of the available decisions or actions.Our goal is then to minimize the total loss incurred.

The optimal solution is the one which minimizes the loss function. However, the loss function depends on the true class, which is unknown. For a given input vector , our uncertainty in the true class is expressed through the joint probability distribution and so we seek instead to minimize the average loss, where the average is computed with respect to this distribution, which is given by

Each can be assigned independently to one of the decision regions .Our goal is to choose the regions in order to minimize the expected loss, which implies that for each we should minimize .As before, we can use the product rule to eliminate the common factor of p(x). Thus the decision rule that minimizes the expected loss is the one that assigns each new to the class for which the quantity is a minimum. This is clearly trivial to do, once we know the posterior class probabilities .

The reject option

We have seen that classification errors arise from the regions of input space where the largest of the posterior probabilities is significantly less than unity, or equivalently where the joint distributions have comparable values. In some applications, it will be appropriate to avoid making decisions on the difficult cases in anticipation of a lower error rate on those examples for which a classification decision is made. This is known as the reject option.

Inference and decision

We have broken the classification problem down into two separate stages

the inference stage in which we use training data to learn a model for

the decision stage in which we use these posterior probabilities to make op- timal class assignments.

To solving decision problems, find a function , called a discriminant function, which maps each input directly onto a class label. For instance, in the case of two-class problems, might be binary valued and such that represents class and represents class . In this case, probabilities play no role, we no longer have access to the posterior probabilities .There are many powerful reasons for wanting to compute the posterior probabilities, even if we subsequently use them to make decisions. These include:

- Minimizing risk.

Consider a problem in which the elements of the loss matrix are subjected to revision from time to time.If we know the posterior probabilities, we can trivially revise the minimum risk decision criterion by modifying appropriately.If we have only a discriminant function, then any change to the loss matrix would require that we return to the training data and solve the classification problem afresh - Rejectoption.

Posterior probabilities allow us to determine a rejection criterion that will minimize the misclassification rate, or more generally the expected loss, for a given fraction of rejected data points. - Compensating for class priors.

A balanced data set in which we have selected equal numbers of exam- ples from each of the classes would allow us to find a more accurate model. However, we then have to compensate for the effects of our modifications to the training data. Suppose we have used such a modified data set and found models for the posterior probabilities. From Bayes’ theorem, we see that the posterior probabilities are proportional to the prior probabilities, which we can interpret as the fractions of points in each class. We can therefore simply take the posterior probabilities obtained from our artificially balanced data set and first divide by the class fractions in that data set and then multiply by the class fractions in the population to which we wish to apply the model. Finally, we need to normalize to ensure that the new posterior probabilities sum to one. Note that this procedure cannot be applied if we have learned a discriminant function directly instead of determining posterior probabilities. - **Combining models. **

For complex applications, we may wish to break the problem into a number of smaller subproblems each of which can be tackled by a sep- arate module.

Loss functions for regression

So far, we have discussed decision theory in the context of classification prob- lems. We now turn to the case of regression problems, such as the curve fitting example discussed earlier. The decision stage consists of choosing a specific estimate of the value of for each input .Suppose that in doing so, we incur a loss . The average, or expected, loss is then given by

A common choice of loss function in regression problems is the squared loss given by . In this case, the expected loss can be written

Our goal is to choose y(x) so as to minimize . If we assume a completely flexible function , we can do this formally using the calculus of variations to give

Solving for , and using the sum and product rules of probability, we obtain

which is the conditional average of conditioned on and is known as the regression function.To multiple target variables represented by the vector , in which case the optimal solution is the conditional average .We can also derive this result in a slightly different way, which will also shed light on the nature of the regression problem. Armed with the knowledge that the optimal solution is the conditional expectation, we can expand the square term as follows

where, to keep the notation uncluttered, we use to denote .Substituting into the loss function and performing the integral over t, we see that the cross-term vanishes and we obtain an expression for the loss function in the form

The function we seek to determine enters only in the first term, which will be minimized when is equal to , in which case this term will vanish. This is simply the result that we derived previously and that shows that the optimal least squares predictor is given by the conditional mean. The second term is the variance of the distribution of , averaged over . It represents the intrinsic variability of the target data and can be regarded as noise. Because it is independent of , it represents the irreducible minimum value of the loss function.As with the classification problem, we can either determine the appropriate prob- abilities and then use these to make optimal decisions, or we can build models that make decisions directly. Indeed, we can identify three distinct approaches to solving regression problems given, in order of decreasing complexity,by

(a). First solve the inference problem of determining the joint density . Then normalize to find the conditional density , and finally marginalize to find the conditional mean given by (1.3.4)

(b). First solve the inference problem of determining the conditional density , and then subsequently marginalize to find the conditional mean given by (1.3.4)

©. Find a regression function directly from the training data.

The relative merits of these three approaches follow the same lines as for classifica- tion problems above.

The squared loss is not the only possible choice of loss function for regression. Indeed, there are situations in which squared loss can lead to very poor results and where we need to develop more sophisticated approaches. An important example concerns situations in which the conditional distribution is multimodal, as often arises in the solution of inverse problems. Here we consider briefly one simple generalization of the squared loss, called the Minkowski loss, whose expectation is given by

which reduces to the expected squared loss for .The minimum of is given by the conditional mean for , the conditional median for , and the conditional mode for .

Information Theory

n this chapter, we have discussed a variety of concepts from probability theory and decision theory that will form the foundations for much of the subsequent discussion in this book. We close this chapter by introducing some additional concepts from the field of information theory, which will also prove useful in our development of pattern recognition and machine learning techniques.

We begin by considering a discrete random variable and we ask how much information is received when we observe a specific value for this variable. The amount of information can be viewed as the ‘degree of surprise’ on learning the value of . If we are told that a highly improbable event has just occurred, we will have received more information than if we were told that some very likely event has just occurred, and if we knew that the event was certain to happen we would receive no information. Our measure of information content will therefore depend on the probability distribution , and we therefore look for a quantity that is a monotonic function of the probability and that expresses the information content. The form of can be found by noting that if we have two events and that are unrelated, then the information gain from observing both of them should be the sum of the information gained from each of them separately, so that . Two unrelated events will be statistically independent and so . From these two relationships, it is easily shown that must be given by the logarithm of and so we have

where the negative sign ensures that information is positive or zero.Note that low probability events x correspond to high information content. The choice of basis for the logarithm is arbitrary, and for the moment we shall adopt the convention prevalent in information theory of using logarithms to the base of 2.

Now suppose that a sender wishes to transmit the value of a random variable to a receiver. The average amount of information that they transmit in the process is obtained by taking the expectation of (1.4.1) with respect to the distribution and is given by

This important quantity is called the entropy of the random variable x.

Note that and so we shall take whenever we encounter a value for such that .

We now show that these definitions indeed possess useful properties. Consider a random variable x having 8 possible states, each of which is equally likely. In order to communicate the value of x to a receiver, we would need to transmit a message of length 3 bits. Notice that the entropy of this variable is given by

For a density defined over multiple continuous variables, denoted collectively by the vector , the differential entropy is given by

In the case of discrete distributions, we saw that the maximum entropy configuration corresponded to an equal distribution of probabilities across the possible states of the variable.Let us now consider the maximum entropy configuration for a continuous variable.

Omit the proof process, the distribution that maximizes the differential entropy is the Gaussian.If we evaluate the differential entropy of the Gaussian, we obtain

proof as follow

as prooved

for single variant

so

let , then

as , that and is symmetric in

thenfor multi-variant

if , with Mahalanobis transform let

then , that means is the normalization gaussion distribution

as

and

so

so

Suppose we have a joint distribution from which we draw pairs of values of and . If a value of is already known, then the additional information needed to specify the corresponding value of is given by . Thus the average additional information needed to specify can be written as

which is called the conditional entropy of given .

It is easily seen, using the product rule, that the conditional entropy satisfies the relation

where is the differential entropy of and is the differential entropy of the marginal distribution . Thus the information needed to describe and is given by the sum of the information needed to describe alone plus the additional information required to specify given .

Relative entropy and mutual information

So far in this section, we have introduced a number of concepts from information theory, including the key notion of entropy. We now start to relate these ideas to pattern recognition. Consider some unknown distribution , and suppose that we have modelled this using an approximating distribution . If we use to construct a coding scheme for the purpose of transmitting values of to a receiver, then the average additional amount of information (in nats) required to specify the value of (assuming we choose an efficient coding scheme) as a result of using instead of the true distribution is given by

This is known as the relative entropy or Kullback-Leibler divergence or KL divergence, between the distributions and .

Probability Distributions

We have emphasized the central role played by probability theory in the solution of pattern recognition problems. We turn now to an exploration of some particular examples of probability distributions and their properties. As well as being of great interest in their own right, these distributions can form building blocks for more complex models and will be used extensively throughout the entire. The distributions introduced in this part will also serve another important purpose, namely to provide us with the opportunity to discuss some key statistical concepts, such as Bayesian inference, in the context of simple models before we encounter them in more complex situations in later chapters.

Binary Variables

We begin by considering a single binary random variable . For example, might describe the outcome of flipping a coin, with representing ‘heads’, and representing ‘tails’. We can imagine that this is a damaged coin so that the probability of landing heads is not necessarily the same as that of landing tails. The probability of will be denoted by the parameter so that

where , from which it follows that . The probability distribution over x can therefore be written in the form

which is known as the Bernoulli distribution. It is easily verified that this distribution is normalized and that it has mean and variance given by

Now suppose we have a data set of observed values of . We can construct the likelihood function, which is a function of , on the assumption that the observations are drawn independently from , so that

In a frequentist setting, we can estimate a value for by maximizing the likelihood function, or equivalently by maximizing the logarithm of the likelihood. In the case of the Bernoulli distribution, the log likelihood function is given by

At this point, it is worth noting that the log likelihood function depends on the N observations only through their sum .This sum provides an example of a sufficient statistic for the data under this distribution, and we shall study the impor- tant role of sufficient statistics in some detail.If we set the derivative of ln with respect to equal to zero , we obtain the maximum likelihood estimator , which is also known as the sample mean. If we denote the number of observations of (heads) within this data set by , then we can write in the form . So that the probability of landing heads is given, in this maximum likelihood frame- work, by the fraction of observations of heads in the data set.

Now suppose we flip a coin, say, 3 times and happen to observe 3 heads. Then and . In this case, the maximum likelihood result would predict that all future observations should give heads. Common sense tells us that this is unreasonable, and in fact this is an extreme example of the over-fitting associated with maximum likelihood. We shall see shortly how to arrive at more sensible conclusions through the introduction of a prior distribution over .

We can also work out the distribution of the number m of observations of x = 1, given that the data set has size N. This is called the binomial distribution and that it is proportional to . In order to obtain the normalization coefficient we note that out of N coin flips, we have to add up all of the possible ways of obtaining m heads, so that the binomial distribution can be written is the number of ways of choosing m objects out of a total of N identical objects.

formal mean

formal variance

The beta distribution

We have seen that the maximum likelihood setting for the parameter in the Bernoulli distribution, and hence in the binomial distribution, is given by the fraction of the observations in the data set having . As we have already noted,

this can give severely over-fitted results for small data sets. In order to develop a Bayesian treatment for this problem, we need to introduce a prior distribution over the parameter .

Here we consider a form of prior distribution that has a simple interpretation as well as some useful analytical properties. To motivate this prior, we note that the likelihood function takes the form of the product of factors of the form .We choose a prior to be proportional to powers of and , then the posterior distribution, which is proportional to the product of the prior and the likelihood function, will have the same functional form as the prior. This property is called conjugacy. We therefore choose a prior, called the beta distribution, given by

Gamma Function . For the gamma function

where is the gamma function, and the coefficient in (2.1.5) ensures that the beta distribution is normalized, so that

The mean and variance of the beta distribution are given by

the mean:

the variance:

The parameters and are often called hyperparameters because they control the distribution of the parameter .

The posterior distribution of is now obtained by multiplying the beta prior (2.1.5) by the binomial likelihood function and normalizing. Keeping only the factors that depend on , we see that this posterior distribution has the form .where , and therefore corresponds to the number of ‘tails’ in the coin example.Indeed, it is simply another beta distribution, and its normalization coefficient can therefore be obtained by comparison with (2.1.5) to give

We see that the effect of observing a data set of observations of and observations of has been to increase the value of by , and the value of by , in going from the prior distribution to the posterior distribution.This allows us to provide a simple interpretation of the hyperparameters and in the prior as an effective number of observations of and , respectively. Note that and need not be integers.

If our goal is to predict, as best we can, the outcome of the next trial, then we must evaluate the predictive distribution of , given the observed data set . From the sum and product rules of probability, this takes the form

Using the result (2.1.7) for the posterior distribution , together with the result for the mean of the beta distribution, we obtain . which has a simple interpretation as the total fraction of observations (both real observations and fictitious prior observations) that correspond to .

Multinomial Variables

Binary variables can be used to describe quantities that can take one of two possible values. Often, however, we encounter discrete variables that can take on one of K possible mutually exclusive states.Although there are various alternative ways to express such variables, we shall see shortly that a particularly convenient representation is the 1-of-K scheme in which the variable is represented by a K-dimensional vector in which one of the elements equals 1, and all remaining elements equal 0. So, for instance if we have a variable that can take states and a particular observation of the variable happens to correspond to the state where , then x will be represented by . Note that such vectors satisfy . If we denote the probability of by the parameter , then the distribution of given

where , and the parameters are constrained to satisfy and

The distribution (2.2.1) can be regarded as a generalization of the Bernoulli distribution to more than two outcomes.

It is easily seen that the distribution is normalized

and that

Now consider a data set of independent observations . The corresponding likelihood function takes the form

We see that the likelihood function depends on the N data points only through the quantities . Which represent the number of observations of . These are called the sufficient statistics for this distribution.

In order to find the maximum likelihood solution for , we need to maximize with respect to taking account of the constraint that the must sum to 1. This can be achieved using a Lagrange multiplier and maximizing

Setting the derivative of (2.2.5) with respect to to zero , we obtain

We can solve for the Lagrange multiplier λ by substituting (2.2.6) into the constraint to to give , Thus we obtain the maximum likelihood solution in the form

which is the fraction of the N observations for which .

We can consider the joint distribution of the quantities , conditioned on the parameters and on the total number N of observations. From (2.2.4) this takes the form

which is known as the multinomial distribution.

Note that the variables are subject to the constraint

The Dirichlet distribution

We now introduce a family of prior distributions for the parameters {μk} of the multinomial distribution (2.2.7).By inspection of the form of the multinomial distribution, we see that the conjugate prior is given by

where and . Here are the parameters of the distribution, and denotes . Note that, because of the summation constraint, the distribution over the space of the is confined to a simplex of dimensionality K − 1. The normalized form for this distribution is by

which is called the Dirichlet distribution, and

Multiplying the prior (2.2.10) by the likelihood function (2.2.8), we obtain the posterior distribution for the parameters in the form

We see that the posterior distribution again takes the form of a Dirichlet distribution, confirming that the Dirichlet is indeed a conjugate prior for the multinomial. This allows us to determine the normalization coefficient by comparison with (2.2.10) so that

where we have denoted . As for the case of the binomial distribution with its beta prior, we can interpret the parameters αk of the Dirichlet prior as an effective number of observations of .

Note that two-state quantities can either be represented as binary variables and modelled using the binomial distribution or as 1-of-2 variables and modelled using the multinomial distribution(2.2.8) with K = 2

The Gaussian Distribution

The Gaussian, also known as the normal distribution, is a widely used model for the distribution of continuous variables. In the case of a single variable , the Gaussian distribution can be written in the form

where is the mean and is the variance. For a D-dimensional vector , the multivariate Gaussian distribution takes the form

where is a D-dimensional mean vector, Σ is a covariance matrix, and denotes the determinant of .

Deducing

For arbitrary , transform by , then we get

Consider the N indepedent variant, such as the N-dimentional column vector , corresponding variance is , with the product rule, we have that

For the exponent part, called the Mahalanobis distance from to and reduces to the Euclidean distance when is the identity matrix. , make the matrix transform, then

First of all, we note that the matrix can be taken to be symmetric, without loss of generality, because any antisymmetric component would disappear from the exponent. Now consider the eigenvector equation for the covariance matrix

where . Because is a real, symmetric matrix its eigenvalues will be real, and its eigenvectors can be chosen to form an orthonormal set, so that

where is the element of the identity matrix and satisfies

The covariance matrix can be expressed as an expansion in terms of its eigenvectors in the form

and similarly the inverse covariance matrix can be expressed as

Then the in the deducing part quadratic form becomes

where we have defined

we can interpret as a new coordinate system defined by the orthonormal vectors that are shifted and rotated with respect to the original coordinates. Forming the vector , we have

where U is a matrix whose rows are given by . From (2.3.4) it follows that U is an orthogonal matrix, i.e., it satisfies , and hence also , where I is the identity matrix.

The quadratic form, and hence the Gaussian density, will be constant on surfaces for which (2.3.9.0) is constant. If all of the eigenvalues are positive, then these surfaces represent ellipsoids, with their centres at and their axes oriented along , and with scaling factors in the directions of the axes given by

For the Gaussian distribution to be well defined, it is necessary for all of the eigenvalues of the covariance matrix to be strictly positive, otherwise the distribution cannot be properly normalized. A matrix whose eigenvalues are strictly positive is said to be positive definite. If all of the eigenvalues are nonnegative, then the covariance matrix is said to be positive semidefinite.

Now consider the form of the Gaussian distribution in the new coordinate system defined by the . In going from the x to the y coordinate system, we have a Jacobian matrix J with elements given by

where are the elements of the matrix . Using the orthonormality property of the matrix U, we see that the square of the determinant of the Jacobian matrix is

and hence = 1. Also, the determinant of the covariance matrix can be written as the product of its eigenvalues, and hence

Thus in the coordinate system, the Gaussian distribution takes the form

which is the product of D independent univariate Gaussian distributions. The eigenvectors therefore define a new set of shifted and rotated coordinates with respect to which the joint probability distribution factorizes into a product of independent distributions. The integral of the distribution in the y coordinate system is then

where we have used the result (1.2.26) for the normalization of the univariate Gaussian. This confirms that the multivariate Gaussian (2.3.2) is indeed normalized.

Linear Models for Regression

The focus so far in this book has been on unsupervised learning, including topics such as density estimation and data clustering. We turn now to a discussion of super- vised learning, starting with regression.

GOAL

The goal of regression is to predict the value of one or more continuous target variables t given the value of a D-dimensional vec- tor x of input variables.

The simplest form of linear regression models are also linear functions of the input variables. However, we can obtain a much more useful class of functions by taking linear combinations of a fixed set of nonlinear functions of the input variables, known as basis functions.Such models are linear functions of the parameters, which gives them simple analytical properties, and yet can be nonlinear with respect to the input variables.

Given a training data set comprising N observations , where n = 1, . . . , N , together with corresponding target values , the goal is to predict the value of for a new value of . In the simplest approach, this can be done by directly constructing an appropriate function whose values for new inputs constitute the predictions for the corresponding values of . More generally, from a probabilistic perspective, we aim to model the predictive distribution because this expresses our uncertainty about the value of for each value of .

Evaluation

Although linear models have significant limitations as practical techniques for pattern recognition, particularly for problems involving input spaces of high dimen- sionality, they have nice analytical properties and form the foundation for more sophisticated models to be discussed in later chapters.

Linear Basis Function Models

The simplest linear model for regression is one that involves a linear combination of the input variables

where . This is often simply known as linear regression.Thekey property of this model is that it is a linear function of the parameters . It is also, however, a linear function of the input variables , and this imposes significant limitations on the model. We therefore extend the class of models by considering linear combinations of fixed nonlinear functions of the input variables, of the form

where are known as basis functions. By denoting the maximum value of the index j by M − 1, the total number of parameters in this model will be M.

The parameter allows for any fixed offset in the data and is sometimes called a bias parameter.It is often convenient to define an additional dummy ‘basis function so that

where and .

By using nonlinear basis functions, we allow the function to be a nonlinear function of the input vector .

The example of polynomial regression is a particular example of this model in which there is a single input variable , and the basis functions take the form of powers of x so that . One limitation of polynomial basis functions is that they are global functions of the input variable, so that changes in one region of input space affect all other regions. This can be resolved by dividing the input space up into regions and fit a different polynomial in each region, leading to spline functions.

Basis Function Set

There are many other possible choices for the basis functions, for example

where the govern the locations of the basis functions in input space, and the parameter governs their spatial scale.

Another possibility is the sigmoidal basis function of the form

where is the logistic sigmoid function defined by

Yet another possible choice of basis function is the Fourier basis, which leads to an expansion in sinusoidal functions. Each basis function represents a specific fre- quency and has infinite spatial extent. By contrast, basis functions that are localized to finite regions of input space necessarily comprise a spectrum of different spatial frequencies.

In many signal processing applications, it is of interest to consider ba- sis functions that are localized in both space and frequency, leading to a class of functions known as wavelets.

Most of the discussion in this chapter, however, is independent of the particular choice of basis function set, and so for most of our discussion we shall not specify the particular form of the basis functions.

Maximum likelihood and least squares

As before, we assume that the target variable t is given by a deterministic function with additive Gaussian noise so that

where is a zero mean Gaussian random variable with precision (inverse variance) . Thus we can write

Recall that, if we assume a squared loss function, then the optimal prediction, for a new value of x, will be given by the conditional mean of the target variable. In the case of a Gaussian conditional distribution of the form (3.1.8), the conditional mean will be simply

Note that the Gaussian noise assumption implies that the conditional distribution of t given is unimodal, which may be inappropriate for some applications.

Now consider a data set of inputs with corresponding target values . We group the target variables into a column vector that we denote by where the typeface is chosen to distinguish it from a single observation of a multivariate target, which would be denoted . Making the assumption that these data points are drawn independently from the distribution (3.1.8), we obtain the following expression for the likelihood function, which is a function of the adjustable parameters and , in the form

Note that in supervised learning problems such as regression (and classification), we are not seeking to model the distribution of the input variables.Thus will always appear in the set of conditioning variables, and so from now on we will drop the explicit from expressions such as in order to keep the notation uncluttered.Taking the logarithm of the likelihood function, and making use of the standard form for the univariate Gaussian, we have

where the sum-of-squares error function is defined by

Having written down the likelihood function, we can use maximum likelihood to determine and .

Consider first the maximization with respect to . As observed already before, we see that maximization of the likelihood function under a conditional Gaussian noise distribution for a linear model is equivalent to minimizing a sum-of-squares error function given by . The gradient of the log likelihood function (3.1.11) takes the form

Setting this gradient to zero gives

Solving for w we obtain

which are known as the normal equations for the least squares problem. Here is an N×M matrix, called the design matrix, whose element are given by , so that

The quantity is known as the Moore-Penrose pseudo-inverse of the matrix

It can be regarded as a generalization of the notion of matrix inverse to nonsquare matrices. Indeed, if is square aninvertible, then using the property we seet hat .

At this point, we can gain some insight into the role of the bias parameter . If we make the bias parameter explicit, then the error function (3.1.12) becomes

Setting the derivative with respect to equal to zero,, and solving for , we obtain

where we have defined

Thus the bias compensates for the difference between the averages (over the training set) of the target values and the weighted sum of the averages of the basis function values.

We can also maximize the log likelihood function (3.1.11) with respect to the noise precision parameter , giving

and so we see that the inverse of the noise precision is given by the residual variance of the target values around the regression function.

Geometry of least squares

See more detail picture of the geometry of least squares with Bing.com

Sequential learning

Batch techniques, such as the maximum likelihood solution (3.1.15), which involve processing the entire training set in one go, can be computationally costly for large data sets .If the data set is sufficiently large, it may be worthwhile to use sequential algorithms, also known as on-line algorithms, in which the data points are considered one at a time, and the model parameters up- dated after each such presentation. Sequential learning is also appropriate for real- time applications in which the data observations are arriving in a continuous stream, and predictions must be made before all of the data points are seen.

SGD

We can obtain a sequential learning algorithm by applying the technique of stochastic gradient descent, also known as sequential gradient descent, as follows. If the error function comprises a sum over data points , then after presentation of pattern n, the stochastic gradient descent algorithm updates the parameter vector using

where denotes the iteration number and is a learning rate patameter. The value of is initialized to some starting vector . For the case of the sum-of-squares error function (3.12), this gives

where . This is known as least-mean-squares or the LMS algorithm. The value of needs to be chosen with care to ensure that the algorithm converges

Regularized least squares

We have introduced the idea of adding a regularization term to an error function in order to control over-fitting, so that the total error function to be minimized takes the form

where is the regularization coefficient that controls the relative importance of the data-dependent error and the regularization term . One of the sim- plest forms of regularizer is given by the sum-of-squares of the weight vector ele- ments

If we also consider the sum-of-squares error function given by (3.1.12), then the total error function becomes

weight decay

This particular choice of regularizer is known in the machine learning literature as weight decay because in sequential learning algorithms, it encourages weight values to decay towards zero, unless supported by the data. In statistics, it provides an example of a parameter shrinkage method because it shrinks parameter values towards zero.It has the advantage that the error function remains a quadratic function of w, and so its exact minimizer can be found in closed form.

Specifically, setting the gradient of (3.1.24) with respect to w to zero, , and solving for w as before, we obtain

This represents a simple extension of the least-squares solution (3.1.15).

A more general regularizer is sometimes used, for which the regularized error takes the form

where corresponds to the quadratic regularizer (3.1.24).

LASSO

The case of is know as the lasso in the statistics literature. It has the property that if is sufficiently large, some of the coefficients are driven to zero, leading to a sparse model in which the corresponding basis functions play no role. To see this, we first note that minimizing (3.26) is equivalent to minimizing the unregularized sum-of-squares error (3.1.12) subject to the constraint

for an appropriate value of the parameter η, where the two approaches can be related using Lagrange multipliers.

Here is the visualaztion of the pictures Regularization lasso Picture from Bing.com

Multiple outputs

So far, we have considered the case of a single target variable t. In some applications, we may wish to predict K > 1 target variables, which we denote collectively by the target vector . This could be done by introducing a different set of basis functions for each component of t, leading to multiple, independent regression problems. However, a more interesting, and more common, approach is to use the same set of basis functions to model all of the components of the target vector so that

The Bias-Variance Decomposition

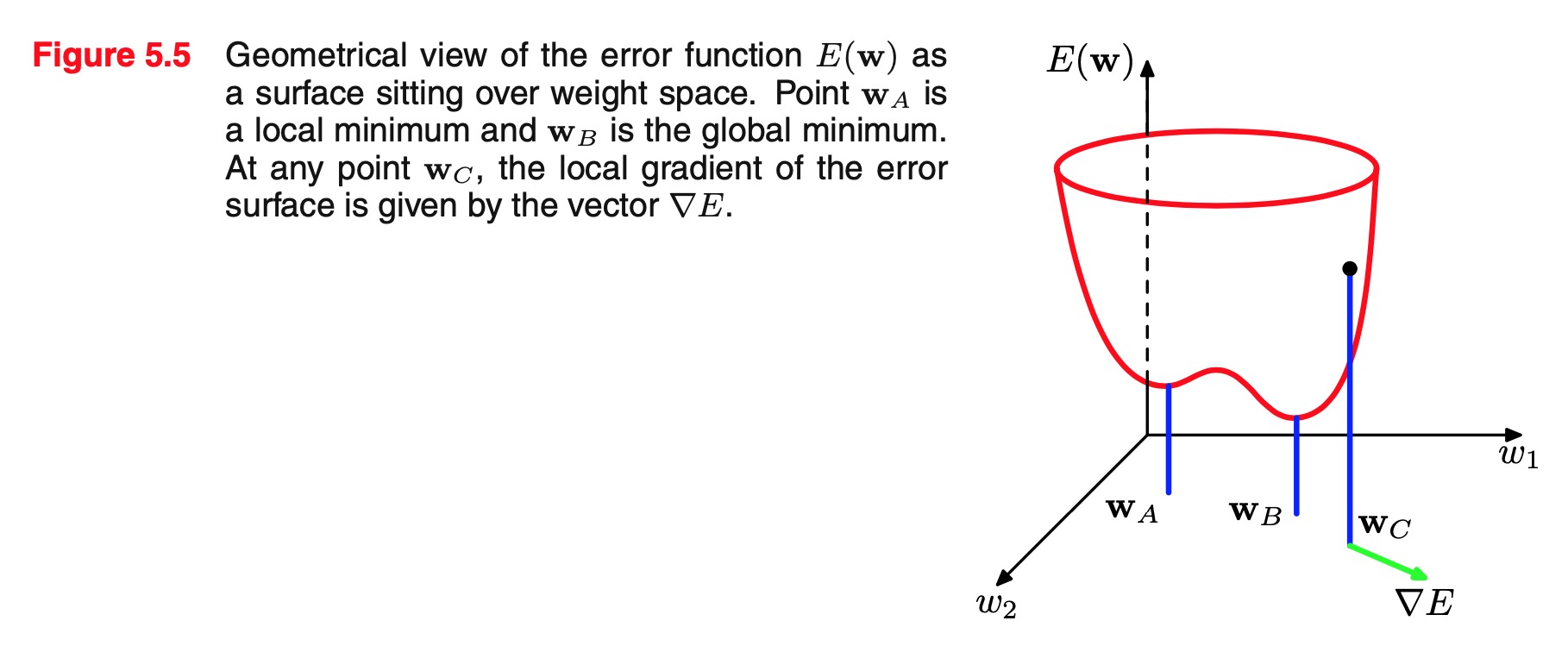

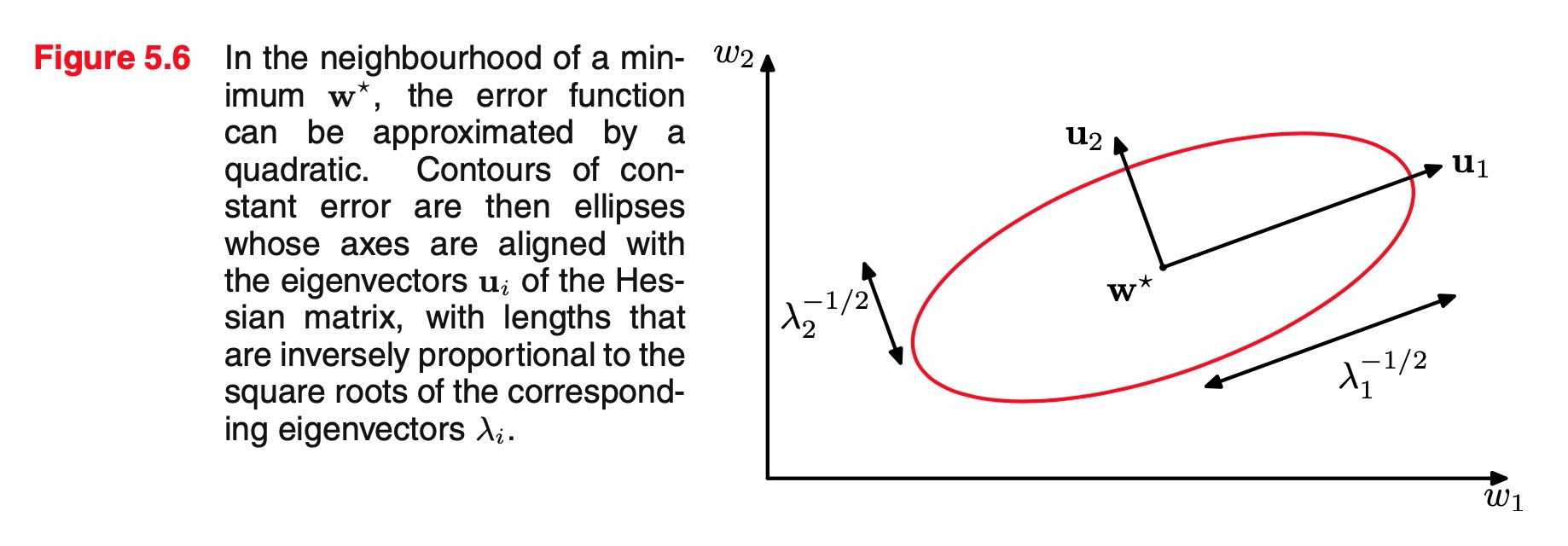

So far in our discussion of linear models for regression, we have assumed that the form and number of basis functions are both fixed. As we have seen, the use of maximum likelihood, or equivalently least squares, can lead to severe over-fitting if complex models are trained using data sets of limited size. However, limiting the number of basis functions in order to avoid over-fitting has the side effect of limiting the flexibility of the model to capture interesting and important trends in the data. Although the introduction of regularization terms can control over-fitting for models with many parameters, this raises the question of how to determine a suitable value for the regularization coefficient .

Seeking the solution that minimizes the regularized error function with respect to both the weight vector and the regularization coefficient is clearly not the right approach since this leads to the unregularized solution with .

In Section 1.6.5, when we discussed decision theory for regression problems, we considered various loss functions each of which leads to a corresponding optimal prediction once we are given the conditional distribution . A popular choice is the squared loss function, for which the optimal prediction is given by the conditional expectation, which we denote by and which is given by

At this point, it is worth distinguishing between the squared loss function arising from decision theory and the sum-of-squares error function that arose in the maximum likelihood estimation of model parameters.

We showed in Section 1.6.5 that the expected squared loss can be written in the form

Recall that the second term, which is independent of , arises from the intrinsic noise on the data and represents the minimum achievable value of the expected loss.

The first term depends on our choice for the function , and we will seek a solution for which makes this term a minimum.Because it is nonnegative, the smallest that we can hope to make this term is zero. If we had an unlimited supply of data (and unlimited computational resources), we could in principle find the regression function to any desired degree of accuracy, and this would represent the optimal choice for . However, in practice we have a data set D containing only a finite number N of data points, and consequently we do not know the regression function exactly.

Expected Loss

If we model the using a parametric function governed by a parameter vector , then from a Bayesian perspective the uncertainty in our model is expressed through a posterior distribution over . A frequentist treatment, however, involves making a point estimate of based on the data set , and tries instead to interpret the uncertainty of this estimate through the following thought experiment. Suppose we had a large number of data sets each of size and each drawn independently from the distribution . For any given data set , we can run our learning algorithm and obtain a prediction function . Different data sets from the ensemble will give different functions and consequently different values of the squared loss. The performance of a particular learning algorithm is then assessed by taking the average over this ensemble of data sets.

We now take the expectation of this expression with respect to D and note that the final term will vanish, giving

We see that the expected squared difference between and the regression function can be expressed as the sum of two terms.

The first term, called the squared bias, represents the extent to which the average prediction over all data sets differs from the desired regression function.

The second term, called the variance, measures the extent to which the solutions for individual data sets vary around their average, and hence this measures the extent to which the function is sensitive to the particular choice of data set.

We shall provide some intuition to support these definitions shortly when we consider a simple example.

expected loss = (bias)2 + variance + noise

Bayesian Linear Regression

[TODO]

Bayesian Model Comparison

[TODO]

The Evidence Approximation

[TODO]

Limitations of Fixed Basis Functions

Throughout this chapter, we have focussed on models comprising a linear combina- tion of fixed, nonlinear basis functions. We have seen that the assumption of linearity in the parameters led to a range of useful properties including closed-form solutions to the least-squares problem, as well as a tractable Bayesian treatment. Furthermore, for a suitable choice of basis functions, we can model arbitrary nonlinearities in the mapping from input variables to targets.

However

The difficulty stems from the assumption that the basis functions are fixed before the training data set is observed and is a manifestation of the curse of dimen- sionality discussed in Section 1.5. As a consequence, the number of basis functions needs to grow rapidly, often exponentially, with the dimensionality D of the input space.

Linear Models for Classification

We have explored a class of regression models having particularly simple analytical and computational properties. We now discuss an analogous class of models for solving classification problems.

GOAL

The goal in classification is to take an input vector and to assign it to one of K discrete classes where . In the most common scenario, the classes are taken to be disjoint, so that each input is assigned to one and only one class. The input space is thereby divided into decision regions whose boundaries are called decision boundaries or decision surfaces. we consider linear models for classification, by which we mean that the decision surfaces are linear functions of the input vector x and hence are defined by (D − 1)-dimensional hyperplanes within the D-dimensional input space.

Data sets whose classes can be separated exactly by linear decision surfaces are said to be linearly separable.

In the case of classification, there are various ways of using target values to represent class labels.

In the linear regression models considered in Chapter 3, the model prediction was given by a linear function of the parameters . In the simplest case, the model is also linear in the input variables and therefore takes the form , so that y is a real number. For classification problems, however, we wish to predict discrete class labels, or more generally posterior probabilities that lie in the range (0, 1). To achieve this, we consider a generalization of this model in which we transform the linear function of using a nonlinear function so that

In the machine learning literature is known as an activation function, whereas its inverse is called a link function in the statistics literature.The decision surfaces correspond to , so that and hence the deci- sion surfaces are linear functions of , even if the function is nonlinear.

Discriminant Functions

A discriminant is a function that takes an input vector and assigns it to one of classes, denoted . In this chapter, we shall restrict attention to linear discriminants, namely those for which the decision surfaces are hyperplanes. To simplify the discussion, we consider first the case of two classes and then investigate the extension to K > 2 classes.

Two classes

The simplest representation of a linear discriminant function is obtained by tak- ing a linear function of the input vector so that

where is called a weight vector, and is a bias (not to be confused with bias in the statistical sense). The negative of the bias is sometimes called a threshold. An input vector is assigned to class if and to class otherwise. The corresponding decision boundary is therefore defined by the relation ,

Reduction

with point line on the decision surface. then

that means and , so

1: the vector is orthogonal to every vector lying within the decision surface, determines the orientation of the decision surface.

2: bias parameter determines the location of the decision surface.

Multiple classes

Considering a single K-class discriminant comprising K linear functions of the form and then assigning a point to class if for all . The decision boundary between class and class is therefore given by and hence corresponds to a (D − 1)-dimensional hyperplane defined by

This has the same form as the decision boundary for the two-class case discussed in Section 4.1.1, and so analogous geometrical properties apply.

Least squares for classification

Each class is described by its own linear model so that , where . We can conveniently group these together using vector notation so that

where is a matrix whose column comprises the D + 1-dimensional vector and is the corresponding augmented input vector with a dummy input = 1. A new input is then assigned to the class for which the output is largest.

We now determine the parameter matrix by minimizing a sum-of-squares error function.

if we use a 1-of-K coding scheme for K classes, then the predictions made by the model will have the property that the elements of y(x) will sum to 1 for any value of x. However, this summation constraint alone is not sufficient to allow the model outputs to be interpreted as probabilities because they are not constrained to lie within the interval (0, 1).

insufficient